Difference between revisions of "Cores"


This page lists some of the past activities within the different cores for metrics-driven extreme-scale parallel anisotropic mesh generation. The current focus is on quantum computing, and the core thrusts will be posted soon.

= Parallel Adaptive Mesh Generation =

==Introduction==
<li style="display: inline-block;">
[[File:Telescopic.png|frameless|right|600px]]

Our long-term goal is to achieve extreme-scale adaptive CFD simulations on complex, heterogeneous High Performance Computing (HPC) platforms. To achieve this goal, we proposed a telescopic approach (see Figure) [https://crtc.cs.odu.edu/pub/html_output/details.php?&title=Telescopic%20Approach%20for%20Extreme-scale%20Parallel%20Mesh%20Generation%20for%20CFD%20Applications&paper_type=1&from=year]. The telescopic approach is critical for leveraging the concurrency that exists at multiple levels in anisotropic and adaptive simulations. At the chip and node levels, the telescopic approach deploys a Parallel Optimistic (PO) layer and a Parallel Data Refinement (PDR) layer, respectively. Finally, between different nodes, the loosely-coupled approach is employed.
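The nesting of the three layers can be pictured with a small sketch. It assumes a hypothetical machine shape (nodes, chips per node, cores per chip) and splits a list of work units level by level, so concurrency multiplies across the levels; the widths, the round-robin splitting, and the names `LEVELS` and `telescopic_assign` are illustrative assumptions, not the project's implementation.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical machine shape (assumption). The layer names follow the text;
# the widths are illustrative only.
LEVELS: List[Tuple[str, str, int]] = [
    ("between nodes", "loosely-coupled", 4),  # number of nodes
    ("node", "PDR", 2),                       # chips per node
    ("chip", "PO", 8),                        # cores per chip
]


def telescopic_assign(
    work: Sequence[int], levels: List[Tuple[str, str, int]] = LEVELS
) -> Dict[Tuple[int, ...], List[int]]:
    """Split work units level by level, round-robin at every level.

    Each key is the path (node, chip, core) of the core that receives
    the listed work units.
    """
    groups: Dict[Tuple[int, ...], List[int]] = {(): list(work)}
    for _level, _layer, width in levels:
        refined: Dict[Tuple[int, ...], List[int]] = {}
        for path, items in groups.items():
            for i in range(width):
                # Every child at this level takes every width-th item.
                refined[path + (i,)] = items[i::width]
        groups = refined
    return groups


if __name__ == "__main__":
    assignment = telescopic_assign(range(128))
    print(len(assignment))        # 64, i.e. 4 nodes * 2 chips * 8 cores
    print(assignment[(0, 0, 0)])  # [0, 64]
```

Round-robin splitting by index is only a stand-in; an actual mesher would more likely decompose the work by geometry and data dependencies at each level.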

The requirements for parallel mesh generation and adaptivity are:

# '''Stability:''' The quality of the mesh generated in parallel must be comparable to that of a mesh generated sequentially. Quality is defined in terms of the shape of the elements (measured with a chosen location- or error-dependent metric) and the number of elements (fewer is better for the same shape constraint).
# '''Robustness:''' The software must be able to correctly and efficiently process any input data. Operator intervention in a massively parallel computation is not only highly expensive but most likely infeasible, due to the large number of concurrently processed sub-problems.
# '''Code re-use:''' The parallel software should have a modular design that builds upon previously designed sequential meshing code, such that the underlying sequential code can be replaced and/or updated with minimal effort. Code re-use is feasible only if the code satisfies the reproducibility criterion, which was identified for the first time in this project. However, experience from this project indicated that the complexity of state-of-the-art codes inhibits their modification to such a degree that their integration with parallel frameworks like PDR becomes impractical.
# '''Scalability:''' Measured by the speedup, i.e., the ratio of the time taken by the best sequential implementation to the time taken by the parallel implementation. The speedup is always limited by the inverse of the sequential fraction of the software; therefore, all non-trivial stages of the computation must be parallelized to leverage current architectures with millions of cores.
# '''Reproducibility:''' This can be expressed in two forms:
:#''Strong reproducibility'' requires that the sequential mesh generation code, when executed with the same input, produces identical results under both of the following modes of execution:
:## continuous, without restarts, and
:## with restarts and reconstructions of the internal data structures.
:#''Weak reproducibility'' requires that the sequential mesh generation code, when executed with the same input, produces results of the same quality under both of the following modes of execution:
:## continuous, without restarts, and
:## with restarts and reconstructions of the internal data structures.

</li>
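The limit stated under Scalability is Amdahl's law: with a serial fraction s and p processing units, the speedup is at most 1 / (s + (1 - s) / p), which approaches 1/s as p grows. A minimal sketch, assuming s is known in advance (the function names are illustrative), computes both the measured speedup and that upper bound:

```python
def speedup(t_best_sequential: float, t_parallel: float) -> float:
    """Speedup as defined above: best sequential time over parallel time."""
    return t_best_sequential / t_parallel


def amdahl_bound(serial_fraction: float, p: int) -> float:
    """Amdahl's law: the largest speedup attainable on p processing units
    when a fraction `serial_fraction` of the work cannot be parallelized."""
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must lie in [0, 1]")
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / p)


if __name__ == "__main__":
    print(speedup(100.0, 25.0))                  # 4.0
    # Even with a million cores, a 1% serial fraction caps the speedup near 1/0.01 = 100.
    print(round(amdahl_bound(0.01, 10**6), 2))   # 99.99
```

Halving the serial fraction roughly doubles the ceiling, which is why every non-trivial stage, not just the core refinement kernel, has to be parallelized.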

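The two reproducibility criteria can be checked mechanically. The sketch below uses a hypothetical one-dimensional "mesher" (repeated bisection of the longest interval) whose internal priority queue is rebuilt from the point set on every call, which stands in for a restart with reconstruction of the internal data structures. Strong reproducibility compares the outputs for exact equality; weak reproducibility compares only a quality summary, here assumed to be the element count and the longest element. The toy mesher, the quality summary, and all function names are assumptions for illustration, not the project's code.

```python
import heapq
from typing import List, Tuple


def refine(points: List[float], h: float, max_steps: int) -> List[float]:
    """Toy 1D mesher: bisect the longest interval until all are <= h.

    The heap (the internal data structure) is rebuilt from `points` on
    every call, so calling refine() again on a partial result models a
    restart with reconstruction.
    """
    pts = sorted(points)
    heap = [(-(b - a), a, b) for a, b in zip(pts, pts[1:])]
    heapq.heapify(heap)
    for _ in range(max_steps):
        if not heap or -heap[0][0] <= h:
            break  # every interval already satisfies the size bound
        _, a, b = heapq.heappop(heap)
        m = 0.5 * (a + b)
        pts.append(m)
        heapq.heappush(heap, (-(m - a), a, m))
        heapq.heappush(heap, (-(b - m), m, b))
    return sorted(pts)


def quality(points: List[float]) -> Tuple[int, float]:
    """Hypothetical quality summary: (number of elements, longest element)."""
    lengths = [b - a for a, b in zip(points, points[1:])]
    return len(lengths), max(lengths)


def strongly_reproducible(run_a: List[float], run_b: List[float]) -> bool:
    """Identical results."""
    return run_a == run_b


def weakly_reproducible(run_a: List[float], run_b: List[float], tol: float = 1e-12) -> bool:
    """Results of the same quality (same count, longest element within tol)."""
    (n_a, worst_a), (n_b, worst_b) = quality(run_a), quality(run_b)
    return n_a == n_b and abs(worst_a - worst_b) <= tol


if __name__ == "__main__":
    h = 0.1
    continuous = refine([0.0, 1.0], h, max_steps=10**6)
    partial = refine([0.0, 1.0], h, max_steps=5)         # stop early ("checkpoint")
    restarted = refine(partial, h, max_steps=10**6)      # restart and rebuild the heap
    print(strongly_reproducible(continuous, restarted))  # True
    print(weakly_reproducible(continuous, restarted))    # True
    print(quality(continuous))                           # (16, 0.0625)
```

Because this toy mesher's final result does not depend on the order in which intervals are split, it is strongly reproducible; a mesher whose output depends on insertion order, as Delaunay refinement often does, might satisfy only the weak criterion.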

=== Tightly-coupled approaches ===

This group has experimented with two tightly-coupled approaches:

# [[Isotropic Mesh Generation | CDT3D]]
# Parallel Optimistic Delaunay Mesh Generation for medical images; for details, see [https://crtc.cs.odu.edu/pub/html_output/details.php?&title=High%20Quality%20Real-Time%20Image-to-Mesh%20Conversion%20for%20Finite%20Element%20Simulations&paper_type=2&from=year]

=== Partially-coupled approaches ===

:''' Data Decomposition'''
:# [[PDR.TetGen | Parallel Data Refinement using TetGen ]]
:# [[PDR.AFLR | Parallel Data Refinement using AFLR]]
:# Parallel Data Refinement using Parallel Optimistic Delaunay Mesh Generation for medical images; for details, see [https://crtc.cs.odu.edu/pub/html_output/details.php?&title=Scalable%203D%20Hybrid%20Parallel%20Delaunay%20Image-to-Mesh%20Conversion%20Algorithm%20for%20Distributed%20Shared%20Memory%20Architectures&paper_type=2&from=year] and [https://crtc.cs.odu.edu/pub/html_output/details.php?&title=A%20Hybrid%20Parallel%20Delaunay%20Image-to-Mesh%20Conversion%20Algorithm%20Scalable%20on%20Distributed-Memory%20Clusters&paper_type=2&from=year]

:''' Domain Decomposition'''
: [https://crtc.cs.odu.edu/pub/html_output/details.php?&title=Algorithm%20872:%20Parallel%202D%20Constrained%20Delaunay%20Mesh%20Generation&paper_type=2&from=year PCDM]
:Its extension to 3D is in progress.

=== Loosely-coupled approach ===

[[PREMA.CDT3D | Loosely coupled using CDT3D ]]

= Parallel Runtime Software Systems =

= Real-time Medical Image Computing =

[[Image-to-Mesh Conversion | Real Time Medical Image Computing]]

Latest revision as of 11:56, 19 August 2022

This page lists some of the past activities within the different cores for metrics-driven extreme-scale parallel anisotropic mesh generation. The current focus is on quantum computing and the core thrusts will be posted soon.

Contents

Parallel Adaptive Mesh Generation

Introduction

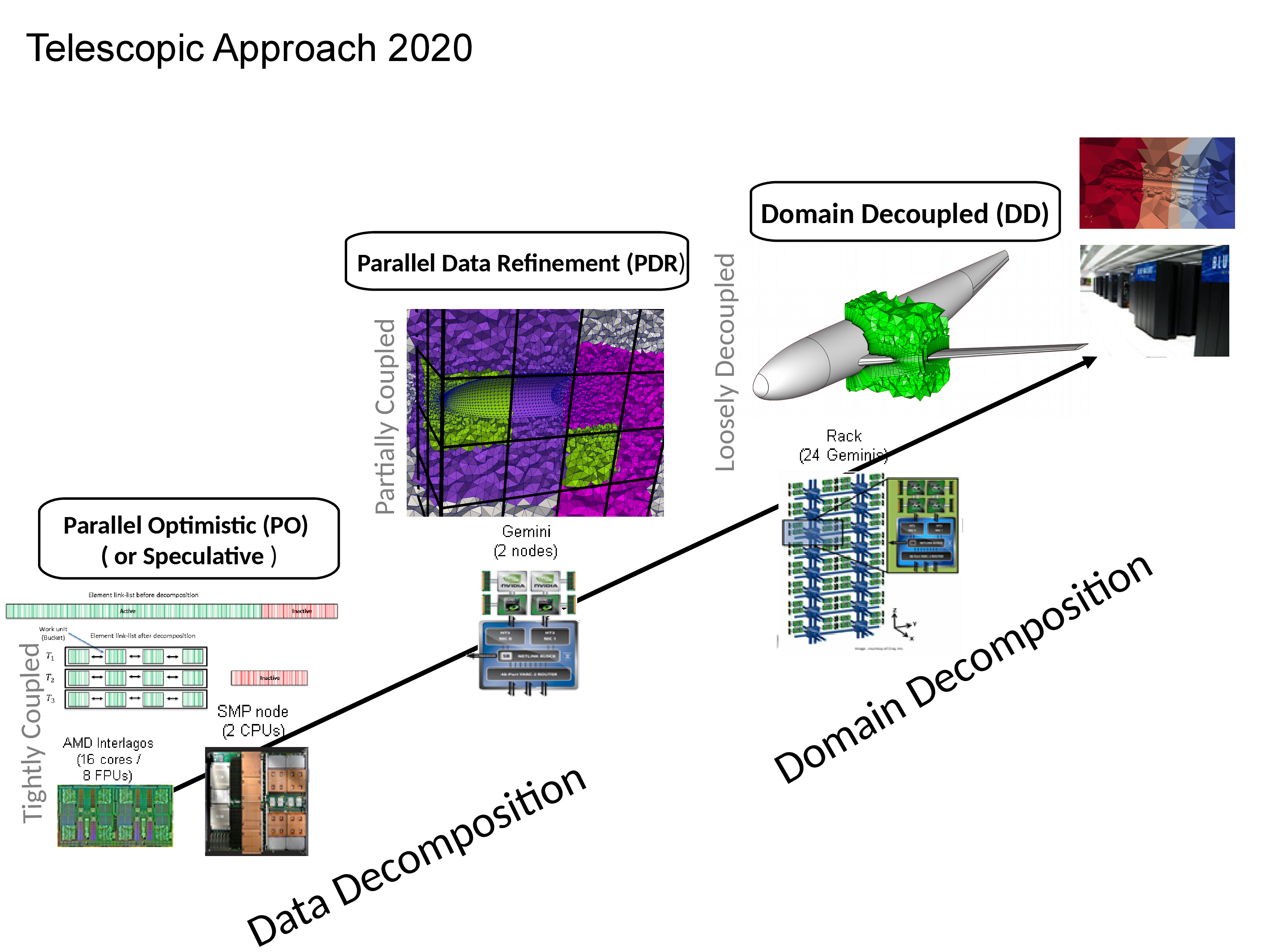

Our long term goal is to achieve extreme-scale adaptive CFD simulations on the complex, heterogeneous High Performance Computing (HPC) platforms. To achieve this goal, we proposed in a telescopic approach (see Figure) [1]. The telescopic approach is critical in leveraging the concurrency that exists at multiple levels in anisotropic and adaptive simulations. At the chip and node levels, the telescopic approach deploys a Parallel Optimistic (PO) layer and Parallel Data Refinement (PDR) layer, respectively. Finally, between different nodes the loosely-coupled approach is employed

The requirements for parallel mesh generation and adaptivity are:

- Stability: The quality of the mesh generated in parallel must be comparable to that of a mesh generated sequentially. The quality is defined in terms of the shape of the elements (using a chosen location/error-dependent metric), and the number of the elements (fewer is better for the same shape constraint).

- Robustness: the ability of the software to correctly and efficiently process any input data. Operator intervention into a massively parallel computation is not only highly expensive, but most likely infeasible due to the large number of concurrently processed sub-problems.

- Code re-use: a modular design of the parallel software that builds upon previously designed sequential meshing code, such that it can be replaced and/or updated with a minimal effort. Code re-use is feasible only if the code satisfies the reproducibility criterion, identified for the first time in this project. However, the experience from this project indicated that the complexity of state-of-the-art codes inhibits their modifications to a degree that their integration with parallel frameworks like PDR becomes impractical.

- Scalability: the ratio of the time taken by the best sequential implementation to the time taken by the parallel implementation. The speedup is always limited by the inverse of the sequential fraction of the software, and therefore all non-trivial stages of the computation must be parallelized to leverage the current architectures with millions of cores.

- Reproducibility: Which can be expressed into two forms:

- Strong Reproducibility requires that the sequential mesh generation code, when executed with the same input, produces identical results under the following modes of execution:

- continuous without restarts,and

- with restarts and reconstructions of the internal data structures.

- Weak Reproducibility requires that the sequential mesh generation code, when executed with the same input, produces results of the same quality under the following modes of execution:

- continuous without restarts, and

- with restarts and reconstructions of the internal data structures.

- Strong Reproducibility requires that the sequential mesh generation code, when executed with the same input, produces identical results under the following modes of execution:

Tightly-coupled approaches

This groups is experimented with two tightly-coupled approaches :

Partially-coupled approaches

- Data Decomposition

- Parallel Data Refinement using TetGen

- Parallel Data Refinement using AFLR

- Parallel Data Refinement using Parallel Optimistic Delaunay Mesh Generation for medical images , for details see [3] and [4]

- Domain Decomposition

- PCDM

- extension to 3D is in progress.