Difference between revisions of "Facilities"


== Introduction ==

One of the most notable research programs associated with Old Dominion University is the Center for Real Time Computing (CRTC). The purpose of the CRTC is to pioneer advancements in real-time and large-scale physics-based modeling and simulation computing utilizing quality mesh generation. Since its inception, the CRTC has explored the use of real-time computational technology in Image Guided Therapy, storm surge and beach erosion modeling, and Computational Fluid Dynamics simulations for complex Aerospace applications.
The center and its distinguished personnel accomplish their objectives through rigorous theoretical research (which often involves the use of powerful computers) and dynamic collaboration with partners such as Harvard Medical School and NASA Langley Research Center in the US, the Center for Computational Engineering Science (CCES) at RWTH Aachen University in Germany, and the Neurosurgical Department of Huashan Hospital, Shanghai Medical College, Fudan University in China. This research is funded mainly by government agencies such as the National Science Foundation, the National Institutes of Health, and NASA, and by philanthropic organizations such as the John Simon Guggenheim Foundation.

----

The CRTC is currently under the direction of Professor Nikos Chrisochoides, who has been the Richard T. Cheng Chair Professor at Old Dominion University since 2010. Dr. Chrisochoides’ work in parallel mesh generation and deformable registration for image-guided neurosurgery has received international recognition. The algorithms and software tools that he and his colleagues developed are used in clinical studies around the world and have been downloaded more than 40,000 times. He has also received significant funding from the National Science Foundation for his innovative research in parallel mesh generation.

== CRTC Lab & Resources ==

To further its mission of fostering research, Old Dominion University has provided the Center for Real Time Computing with lab space in its Engineering and Computational Sciences Building. The CRTC uses the lab space and the Department of Computer Science’s other resources to conduct its studies. The principal investigators (PIs) who lead research projects at the CRTC Lab have access to a Dell Precision T7500 workstation featuring dual six-core Intel Xeon X5690 processors (12 cores in total). The processor has a clock speed of 3.46 GHz, a 12 MB cache, and a QPI speed of 6.4 GT/s. The processor also supports up to 96 GB of DDR3 ECC SDRAM (6X8GB) at 1333 MHz. The system is augmented by an NVIDIA Quadro 6000 with 6 GB of memory, which provides high-end graphics capabilities. The PIs also have command of an IBM server funded by an NSF MRI award (CNS-0521381), as well as access to the Blacklight system at the Pittsburgh Supercomputing Center.

== Community Outreach ==

[[File:Lab_Space_Outreach.png|frameless|left]]

In addition to research, the lab space and resources of the CRTC may be used for outreach and education activities. Students from the local high school community have visited the lab to view its state-of-the-art equipment and discuss computer science topics with distinguished experts. To continue its outreach to the community, the CRTC will soon make its IBM server available to high school students wishing to gain experience in high performance computing. By granting interested high school students controlled access to its equipment, the CRTC provides them with an exceptional introduction to computer science work and research without jeopardizing other research projects. The CRTC also possesses a 3D visualization system, which it uses in its outreach and education programs. This high-quality, interactive system is especially motivating and exciting to high school students stimulated by multimedia.

== Information Technology Services (ITS) ==

Old Dominion University maintains a robust, broadband, high-speed communications network and High Performance Computing (HPC) infrastructure. The data center facility uses 3200 square feet of conditioned space to accommodate server, core networking, storage, and computational resources. The data center has 100+ racks deployed in an alternating hot and cold aisle configuration. The facility is on raised flooring with minimized obstruction to help optimize air flow. Monitoring tools used in the operations center include SolarWinds Orion network performance software and the Nagios infrastructure monitoring application. The IT Operations center monitors the stability and availability of about 400 production servers (physical and virtual), close to 400 network infrastructure switching and routing devices, enterprise storage, and high performance computing resources.

The network currently comprises a meshed Ten Gigabit Ethernet backbone supporting voice, data, and video, with switched 10Gbps connections to the servers and 1Gbps connections to the desktops. Inter-building network connectivity consists of redundant fiber optic data channels yielding high-speed Gigabit connectivity, with Ten-Gigabit connectivity for key buildings on campus. Ongoing upgrades to inter-building networks will result in data speeds of 10Gbps for the entire campus. ITS currently provides a variety of Internet services, including a 1Gbps connection to Cox Communications and a 2Gbps connection to Cogent. Connections to Internet2 and Cogent are over a private DWDM regional optical network infrastructure, with redundant 10Gbps links to MARIA aggregation nodes in Ashburn, Virginia and Atlanta, Georgia.
The DWDM infrastructure project named ELITE (Eastern Lightwave Internetworking Technology Enterprise) provides access not only to the commodity Internet but also to gateways to other national networks, including the Energy Science Network and Internet2.

== HPC Wahab Cluster ==

Wahab is a reconfigurable HPC cluster based on the OpenStack architecture, designed to support several types of computational research workloads. The Wahab cluster consists of 158 compute nodes with 6320 computational cores, using Intel’s “Skylake” Xeon Gold 6148 processors (20 CPU cores per chip; 40 cores per node). Each compute node has 384 GB of RAM. The cluster also includes 18 accelerator compute nodes, each equipped with four NVIDIA V100 graphics processing units (GPUs). A 100Gbps EDR Infiniband high-speed interconnect provides low-latency, high-bandwidth communication between nodes to support massively parallel computing as well as data-intensive workloads. Wahab is equipped with dedicated high-performance Lustre scratch storage (350 TB usable capacity) and is connected to the 1.2 PB university-wide home/long-term research data networked filesystem. The Wahab cluster also contains 45 TB of storage blocks that can be provisioned for user data in the virtual environment. The relative proportion of these resources can be adjusted depending on the needs of the research community.

Below are the specifications of the Wahab cluster as of March 2020:

{| class="wikitable"
| <b>Node Type</b>
| <b>Total Available Nodes</b>
| <b>Maximum Slots (Cores) per node</b>
| <b>Additional Resource</b>
| <b>Memory (RAM) per node</b>
|-
| Standard Compute
| 158
| 40
| none
| 384 GB
|-
| GPU
| 18
| 28 - 32
| Nvidia V100 GPU
| 128 GB
|}

More details can be found [https://docs.hpc.odu.edu/#wahab-hpc-cluster here].
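The Wahab figures quoted above can be cross-checked with a little arithmetic. The following is a minimal Python sketch, not an official tool: the constant and function names are hypothetical, and the socket count, aggregate RAM, and total GPU count are derived here for illustration rather than stated on this page.

```python
"""Back-of-the-envelope totals for the Wahab cluster figures quoted above."""

# Per-node figures taken from the cluster description.
STANDARD_NODES = 158
CORES_PER_CHIP = 20
CORES_PER_NODE = 40
RAM_PER_NODE_GB = 384
GPU_NODES = 18
GPUS_PER_GPU_NODE = 4


def wahab_totals() -> dict:
    """Return aggregate figures derived from the per-node numbers."""
    return {
        # Implied number of processor chips per node (not stated in the text).
        "chips_per_node": CORES_PER_NODE // CORES_PER_CHIP,
        # Should match the 6320 computational cores quoted in the description.
        "total_cores": STANDARD_NODES * CORES_PER_NODE,
        # Derived values below are illustrative, not official figures.
        "total_ram_gb": STANDARD_NODES * RAM_PER_NODE_GB,
        "total_gpus": GPU_NODES * GPUS_PER_GPU_NODE,
    }


if __name__ == "__main__":
    for name, value in wahab_totals().items():
        print(f"{name}: {value}")
```

The core count reproduces the 6320 cores given in the description (158 nodes at 40 cores each), which suggests that figure covers the standard compute nodes only.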

== HPC Turing Cluster ==

<li style="display: inline-block;">
[[File: Turing.png|thumb|left|250px| '''Turing Cluster''']]
The Turing cluster has been the primary shared high-performance computing (HPC) cluster on campus since 2013. Turing is based on 64-bit Intel Xeon microprocessor architectures, and each node has up to 32 cores and at least 128 GB of memory. As of May 2017, the Turing cluster has 6300 cores available to researchers for computational needs. Researchers have access to several high-memory nodes (512–768 GB) and to nodes with NVIDIA graphics processing units (GPUs) of varying generations: K40, K80, P100, and the state-of-the-art V100 (Volta). There are 33 GPUs in total in Turing. An FDR-based (56 Gbps) Infiniband fabric provides the high-speed network for communication within the cluster. The Turing cluster has redundant head nodes for increased reliability and a dedicated login node. EMC’s Isilon storage (1.3 PB total capacity) serves as the home and long-term mass research data storage. In addition, a 180 TB Lustre high-speed parallel filesystem is provided for scratch space. The University supports parallel research computing using MPI and OpenMP on compute cluster architectures with shared-memory and symmetric multiprocessing compute nodes. Researchers also have access to nodes with Xeon Phi co-processors. Mass storage is integrated into the cluster at 20Gbps, and scratch space is accessible over the FDR-based Infiniband infrastructure. The Turing cluster is primarily used by faculty members conducting research with software such as Ansys, Comsol, R, Mathematics, and Matlab, among others. The cluster also integrates a number of GPU nodes with NVIDIA Tesla M2090 GPUs to support computation that requires graphics processors.
</li>

Below are the specifications of the Turing cluster as of March 2020:

{| class="wikitable"
| <b>Node Type</b>
|}

More details can be found [https://docs.hpc.odu.edu/#hardware-resources here].

EMC’s Isilon storage is the primary storage platform for the high-performance computing environment. It provides home and mass storage for the HPC environment, with a total capacity of over 1 PB. The platform provides scale-out NAS storage that delivers increased performance for file-based data applications and workflows. In addition, EMC’s VNX storage platform is the primary storage environment on campus for virtualized server environments as well as campus enterprise data shares. The VNX platform is a tiered, scalable storage environment for file, block, and object storage. This storage solution is deployed in the enterprise data center with the associated controller, disk, network, and power redundancy.

The data center HVAC solution consists of three (3) 30-ton HVAC units deployed in an N+1 redundant configuration. Racks of server and computational hardware are arranged in an alternating hot and cold aisle configuration. The HVAC units are deployed on a raised floor with perforated tiles in the cold aisles, which allows for superior environmental control and keeps the data center at the desired temperature. Optimized chiller performance is critical for environmental control, and for this reason the main data center has a 45-ton chiller installed to facilitate ventilation and air conditioning. In addition, ITS has fourteen (14) above-the-rack cooling units that complement the main HVAC units. These units do not take any additional rack space in the data center. They are designed to draw hot air from the computational equipment racks and hot aisles and then discharge conditioned cold air down the cold aisles. This provides an energy-efficient cooling solution with zero floor space requirements.

== HPC Hadoop Cluster ==

The six-node Hadoop cluster is dedicated to big data analytics. Each of the six data nodes is equipped with a 1.3 TB solid-state disk (SSD) and 128 GB of RAM for maximum processing performance. Software such as Hadoop MapReduce and Spark is available for research use on this cluster.

| + | == Network Communication Infrastructure == | ||

| + | |||

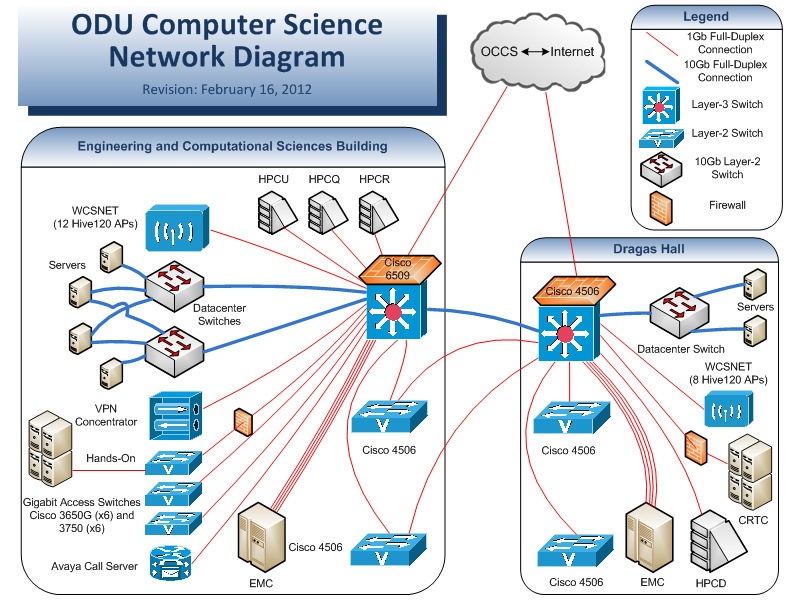

| + | [[File: Odunetwork.png |frameless|right|350px]] | ||

| + | Old Dominion University network communication infrastructure is designed using the state of the art networking and switching hardware platforms. The campus infrastructure backbone is fully redundant and capable of 10Gbps data rates between all distribution modules. The data center infrastructure is designed to operate at 40Gbps data rates between the server and storage platforms. Various VLANs are used to segment the network, isolate traffic, and enforce security policies. Our 100% wireless coverage allows users to take advantage of A, B, G, and N secure connections from either of our buildings. VPN access is available for remote users to access services on our network. All departmental telephone communication is provided via VoIP Avaya phone systems. | ||

| + | |||

| + | We offer a heterogeneous computing environment that primarily consists of Windows and *nix based workstations and servers. On the Windows domain, users are offered network logons, Exchange email, terminal services via our Virtual Computing Lab (VCLab) where users can have access to our software remotely, roaming profiles, MSSQL database access for research, and Hyper-V virtualization for research/faculty projects. For Unix and Linux users we support Solaris, Ubuntu and Red Hat Enterprise Linux (RHEL) distributions. Our *nix services include DNS, NIS, Unix mail, access to personal MySQL databases, class and research project Oracle databases, and both Linux and Unix based FAST aliases for secure shell sessions. In addition to the standard *nix services, High Performance Computing resources are offered to users in the form of multiple Intel-based Rocks HPC clusters, which boast high-speed Infiniband QDR interconnects and top out at a combined 3.5 TFLOPS. A Beowulf cluster is available for use in distributed computing classes. We also offer several GPU servers utilizing the newest CUDA paradigms, and a virtual Symmetric Multi-Processor (SMP) server with 64 physical cores and 512Gb of memory. | ||

| + | Storage for a majority of these resources is redundantly provided via two EMC Celerra NAS devices, one located in each datacenter. This design allows for replication of storage across the network to ensure high availability. These systems provide a combined total of 100Tb of storage and dozens of file systems. Users are provided with CIFS and NFS mounts for use in both windows and *nix environments. We also use these devices to provide iSCSI targets for our VM environments. Research users are allocated storage based on project needs and availability. All user data is backed up multiple times per day as snapshots on our EMC devices and maintained onsite for up to two years on tape. | ||

| + | Additional services provided include, but are not limited to, user web pages, on-demand virtual machines through our Cloud services, copy and print services, audio-visual broadcasting and recording, teleconferencing, and 24/7 end user helpdesk and support. | ||

| + | |||

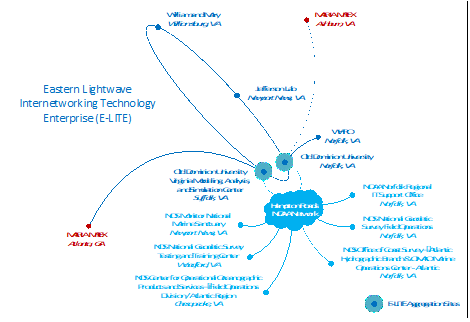

| + | [[File: E-LITE.png |thumb|left|350px|'''Diagram of E-LITE regional network serving the Southeastern Virginia universities and research institutions''']] | ||

| + | DWDM E-LITE Infrastructure Old Dominion University manages the Eastern Lightwave Integrated Technology Enterprise (E-LITE) infrastructure, which provides 10Gbps connectivity to a number of regional institutions to include the College of William & Mary, Jefferson Lab, Old Dominion University, and the Virginia Modeling, Analysis, and Simulation Center (VMASC). E-LITE infrastructure is designed in a physical ring around the Hampton Roads area providing protected 10Gbps connectivity between the member sites and other national networks like MARIA, Energy Science Network and Internet2. E-LITE network and connectivity to MARIA is being redesigned to upgrade the local DWDM ring to be 100Gbps capable as well as establishment of 100Gbps connection to Internet2. Old Dominion University recently completed a major upgrade on the core server distribution to integrate Nexus 7000 hardware. Nexus 7000 platforms are Cisco Systems next generation switching platforms that are designed for the data center to provide virtualized hardware, in-service upgrades, higher 10Gbps and 40Gbps density, higher performance and reliability. These platforms also provide capability to integrate 100Gbps interfaces in the data center infrastructure as needed. Cisco Nexus platforms include 7000 and 5000 series that provide a higher bandwidth and reliable backbone infrastructure for critical services using technologies such as virtual port channels. | ||

| + | |||

| + | Data Center UPS Batteries for HPC and Network infrastructure consist of a (uninterrupted power supply) UPS system rated at 375KWatts. This unit allows for considerable capacity needed for switching between commercial electrical power and dedicated building power generator. The current UPS system utilizes high performance insulated gate bipolar transistors to provide for larger power capabilities, high speed switching and lower control power consumption. | ||

| + | |||

| + | Campus Virtualized Network Infrastructure. The virtualized network infrastructure supports the unique requirements of University business operations, research, scholarly activities, and online course delivery. Course delivery technologies include video streaming and video conferencing. The Campus Network Virtualization is an initiative that was implemented in the campus environment to make sure we enable our network infrastructure to provide the following features: (i) Communities of interests (Virtual Networks). This will allow us to create network based user communities that have the same functions and communication/application needs. This is being accomplished by using MPLS technology. (ii) High performance and redundant security infrastructure. Security is an important part of any network infrastructure. We have to ensure that users are able to perform all their needed tasks on the network while at the same time have the best possible security protection in place. (iii) Flexibility to provision independent network infrastructures. This feature allows us to create smaller independent logical networks on the existing physical infrastructure. This is of great benefit in a research institution of ODU’s stature and will allow us to work with researchers to provide them the needed resources for their success. | ||

Latest revision as of 19:03, 10 March 2020

Contents

Introduction

One of the most notable research programs associated with Old Dominion University is the Center for Real Time Computing (CRTC). The purpose of the CRTC is to pioneer advancements in real-time and large-scale physics-based modeling and simulation computing utilizing quality mesh generation. Since its inception, the CRTC has explored the use of real-time computational technology in Image Guided Therapy, storm surge and beach erosion modeling, and Computational Fluid Dynamics simulations for complex Aerospace applications. The center and its distinguished personnel accomplish their objectives through rigorous theoretical research (which often involves the use of powerful computers) and dynamic collaboration with partners like Harvard Medical School and NASA Langley Research Center in US and Center for Computational Engineering Science (CCES) RWTH Aachen University in Germany and Neurosurgical Department of Huashan Hospital Shanghai Medical College, Fudan University in China. This research is mainly funded from government agencies like ational Science Foundation, National Institute of Health and NASA and philanthropic organizations like John Simon Guggenheim Foundation.

The CRTC is currently under the direction of Professor Nikos Chrisochoides, who has been the Richard T. Cheng Chair Professor at Old Dominion University since 2010. Dr. Chrisochoides’ work in parallel mesh generation and deformable registration for image guided neurosurgery has received international recognition. The algorithms and software tools that he and his colleagues developed are used in clinical studies around the world with more than 40,000 downloads. He has also received significant funding through the National Science Foundation for his innovative research in parallel mesh generation.

CRTC Lab & Resources

To further its mission of fostering research, Old Dominion University has provided the Center for Real Time Computing with lab space in its Engineering and Computational Sciences Building. The CRTC utilizes the lab space and the Department of Computer Science’s other resources to conduct its studies. The principal investigators (PIs) who lead research projects at the CRTC Lab have access to a Dell Precision T7500 workstation, featuring a Dual Six Core Intel Xeon Processor X5690 (total of 12 cores). The processor has a clock speed of 3.46GHz, a cache of 12MB, and QPI speed of 6.4GT/s. The processor also supports up to 96GB of DDR3 ECC SDRAM (6X8GB) at 1333MHz. The system is augmented by the nVIDIA Quadro 6000. With 6 GB of memory, this device provides stunning graphic capabilities. The PIs also have command of an IBM server funded from a NSF MRI award (CNS-0521381), as well as access to the Blacklight system at the Pittsburg Supercomputing Center.

Community Outreach

In addition to research, the lab space and resources of the CRTC may be used for outreach and education activities. Students from the local high school community have visited the lab to view its state-of-the-art equipment and discuss computer science topics with distinguished experts. To continue its outreach to the community, the CRTC will soon make its IBM server available to high school students wishing to gain experience in high performance computing. By granting controlled access of its equipment to interested high school students, the CRTC provides them with an exceptional introduction to computer science work and research, without jeopardizing other research projects. The CRTC also possesses a 3D visualization system, which it uses in its outreach/education programs. This high-quality, interactive system is especially motivating and exciting to high school students stimulated by multi-media.

Information Technology Services (ITS)

Old Dominion University maintains a robust, broadband, high-speed communications network and High Performance Computing (HPC) infrastructure. The facility utilizes 3200 square feet of conditioned space to accommodate server, core networking, storage, and computational resources. The data center has 100+ racks deployed in alternating hot and cold aisle configuration. The data center facility is on raised flooring with minimized obstruction to help facilitate optimized air flow. Some of the monitoring software’s being utilized in the operations center are Solarwinds ORION network performance software and Nagios Infrastructure monitoring application. The IT Operations center monitors the stability and availability of about 400 production servers (physical and virtual), close to 400 network infrastructure switching and routing devices, enterprise storage, and high performance computing resources.

The network is currently comprised of a meshed Ten Gigabit Ethernet backbone supporting voice, data and video with switched 10Gbps connections to the servers and 1Gbps connections to the desktops. Inter-building network connectivity consists of redundant fiber optic data channels yielding high-speed Gigabit connectivity, with Ten-Gigabit connectivity for key building on campus. Ongoing upgrades to Inter-building networks will result in data speeds of 10Gbps for the entire campus. ITS currently provides a variety of Internet services, including 1Gbps connection to Cox communication, 2Gbps connection to Cogent. Connections to Internet2 and Cogent are over a private DWDM regional optical network infrastructure, with redundant 10Gbps links to MARIA aggregation nodes in Ashburn, Virginia and Atlanta, Georgia. The DWDM infrastructure project named ELITE (Eastern Lightwave Internetworking Technology Enterprise) provides access not only to the commodity Internet but gateways to other national networks to include the Energy Science Network and Internet2.

HPC Wahab Cluster

Wahab is a reconfigurable HPC cluster based on OpenStack architecture to support several types of computational research workloads. The Wahab cluster consists of 158 compute nodes and 6320 computational cores using Intel’s “Skylake” Xeon Gold 6148 processors (20 CPU cores per chip; 40 cores per node). Each compute node has 384 GB of RAM, and 18 accelerator compute nodes, each of which is equipped with four NVIDIA’s V100 graphical processing units (GPU). A 100Gbps EDR Infiniband high-speed interconnect provides low-latency, high-bandwidth communication between nodes to support massively parallel computing as well as data-intensive workloads. Wahab is equipped with a dedicated high-performance Lustre scratch storage (350 TB usable capacity) and is connected to the 1.2 PB university-wide home/long-term research data networked filesystem. The Wahab cluster also contains 45 TB of storage blocks that can be provisioned for user data in the virtual environment. The relative proportion of these resources can be adjusted depending on the needs of the research community.

Below are the specifications of the Wahab cluster as of March 2020:

| Node Type | Total Available Nodes | Maximum Slots (Cores) per node | Additional Resource | Memory (RAM) per node |

| Standard Compute | 158 | 40 | none | 384 GB |

| GPU | 18 | 28 - 32 | NVIDIA V100 GPU | 128 GB |

More details can be found here

HPC Turing Cluster

The Turing cluster has been the primary shared high-performance computing (HPC) cluster on campus since 2013. Turing is based on 64-bit Intel Xeon microprocessor architectures, and each node has up to 32 cores and at least 128 GB of memory. As of May 2017, the Turing cluster has 6300 cores available to researchers for computational needs. Researchers have access to several high-memory nodes (512–768 GB), nodes with Xeon Phi co-processors, and nodes with NVIDIA graphics processing units (GPUs) of varying generations: K40, K80, P100, as well as the state-of-the-art V100 (Volta). There is a total of 33 GPUs in Turing. An FDR-based (56 Gbps) Infiniband fabric provides the high-speed network for communication within the cluster. The Turing cluster has redundant head nodes for increased reliability and a dedicated login node.

EMC’s Isilon storage (1.3 PB total capacity) serves as the home and long-term mass research data storage. In addition, a 180 TB Lustre high-speed parallel filesystem is provided for scratch space. Mass storage is integrated into the cluster at 20Gbps, and scratch space is accessible over the FDR-based Infiniband infrastructure.

The University supports research computing with parallel computing using MPI and OpenMP on compute cluster architectures with shared-memory and symmetric multiprocessing compute nodes. The Turing cluster is primarily used by faculty members who conduct research using software such as Ansys, Comsol, R, Mathematica, and Matlab, among others. Also integrated into the Turing cluster are a number of GPU nodes with NVIDIA Tesla M2090 GPUs, to support computation that requires graphics processors.

Below are the specifications of the Turing cluster as of March 2020:

| Node Type | Total Available Nodes | Maximum Slots (Cores) per node | Additional Resource | Memory (RAM) per node |

| Standard Compute | 220 | 16 - 32 | none | 128 GB |

| GPU | 21 | 28 - 32 | NVIDIA K40, K80, P100, V100 GPU(s) | 128 GB |

| Xeon Phi | 10 | 20 | Intel 2250 Phi MICs | 128 GB |

| High Memory | 7 | 32 | none | 512 GB - 768 GB |

More details can be found here

EMC’s Isilon storage is the primary storage platform for the high-performance computing environment. It provides home and mass storage for the HPC environment with a total capacity of over 1 PB. The platform provides scale-out NAS storage that delivers increased performance for file-based data applications and workflows. In addition, EMC’s VNX storage platform is the primary storage environment on campus for virtualized server environments as well as campus data enterprise shares. The VNX platform is a tiered, scalable storage environment for file, block and object storage. This storage solution is deployed in the enterprise data center with the associated controller, disk, network and power redundancy.

The data center HVAC solution consists of three (3) 30-ton HVAC units deployed in an N+1 redundant configuration. Racks of server and computational hardware are arranged in an alternating hot and cold aisle configuration. The HVAC units are deployed on a raised floor with perforated tiles in the cold aisles, which allows for superior environmental control and keeps the data center at the desired temperature levels. Optimized chiller performance is critical for environmental control, and for this reason the main data center has a 45-ton chiller installed to facilitate ventilation and air conditioning. In addition, ITS has fourteen (14) above-the-rack cooling units that complement the main HVAC units. These above-the-rack cooling units do not take any additional rack space in the data center. They are designed to draw hot air from the computational equipment racks and hot aisles and then discharge conditioned cold air down the cold aisle. This provides an energy-efficient cooling solution with zero floor space requirements.
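As a rough illustration of what N+1 redundancy means for the main HVAC units, the sketch below computes the cooling capacity that remains when one unit is out of service. It assumes the three 30-ton units form a single N+1 group and deliberately ignores the 45-ton chiller and the above-the-rack units; the function name and the ton-to-kilowatt conversion constant are illustrative additions, not part of the facility documentation.

```python
# 1 ton of refrigeration corresponds to roughly 3.517 kW of heat removal.
TON_TO_KW = 3.517


def n_plus_one_capacity(unit_count, unit_capacity_tons):
    """Capacity (in tons) still available with one unit out of service."""
    if unit_count < 2:
        raise ValueError("N+1 redundancy needs at least two units")
    return (unit_count - 1) * unit_capacity_tons


if __name__ == "__main__":
    tons = n_plus_one_capacity(3, 30)
    print(f"Capacity with one unit down: {tons} tons "
          f"(about {tons * TON_TO_KW:.0f} kW)")
```

Under these assumptions, the design load has to fit within two units so that any single unit can fail or be serviced without loss of cooling.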

HPC Hadoop Cluster

The six-node Hadoop cluster is dedicated to big data analytics. Each of the six data nodes is equipped with a 1.3 TB solid-state disk (SSD) and 128 GB of RAM for maximum processing performance. Software such as Hadoop MapReduce and Spark is available for research use on this cluster.
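For readers unfamiliar with the MapReduce model that Hadoop implements, the following is a minimal single-process sketch in plain Python. It does not use the Hadoop or Spark APIs; the function names are hypothetical and only illustrate the map, shuffle, and reduce stages that such frameworks distribute across data nodes.

```python
from collections import defaultdict


def map_phase(line):
    """Emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group emitted values by key, as the framework does between stages."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Combine all values for one key into a single count."""
    return key, sum(values)


def word_count(lines):
    """Run the three stages in sequence on an in-memory list of lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


if __name__ == "__main__":
    print(word_count(["big data analytics", "Big data on six nodes"]))
```

On a real cluster the map and reduce functions run in parallel on different nodes and the shuffle moves data over the network; here everything runs in one process purely to show the data flow.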

Network Communication Infrastructure

Old Dominion University’s network communication infrastructure is built on state-of-the-art networking and switching hardware platforms. The campus infrastructure backbone is fully redundant and capable of 10Gbps data rates between all distribution modules. The data center infrastructure is designed to operate at 40Gbps data rates between the server and storage platforms. Various VLANs are used to segment the network, isolate traffic, and enforce security policies. Our 100% wireless coverage allows users to take advantage of secure 802.11a/b/g/n connections from either of our buildings. VPN access is available for remote users to access services on our network. All departmental telephone communication is provided via VoIP Avaya phone systems.

We offer a heterogeneous computing environment that primarily consists of Windows and *nix based workstations and servers. On the Windows domain, users are offered network logons, Exchange email, terminal services via our Virtual Computing Lab (VCLab), where users can access our software remotely, roaming profiles, MSSQL database access for research, and Hyper-V virtualization for research/faculty projects. For Unix and Linux users we support Solaris, Ubuntu and Red Hat Enterprise Linux (RHEL) distributions. Our *nix services include DNS, NIS, Unix mail, access to personal MySQL databases, class and research project Oracle databases, and both Linux and Unix based FAST aliases for secure shell sessions.

In addition to the standard *nix services, High Performance Computing resources are offered to users in the form of multiple Intel-based Rocks HPC clusters, which use high-speed Infiniband QDR interconnects and top out at a combined 3.5 TFLOPS. A Beowulf cluster is available for use in distributed computing classes. We also offer several GPU servers utilizing the newest CUDA paradigms, and a virtual Symmetric Multi-Processor (SMP) server with 64 physical cores and 512 GB of memory.

Storage for a majority of these resources is redundantly provided via two EMC Celerra NAS devices, one located in each datacenter. This design allows for replication of storage across the network to ensure high availability. These systems provide a combined total of 100 TB of storage and dozens of file systems. Users are provided with CIFS and NFS mounts for use in both Windows and *nix environments. We also use these devices to provide iSCSI targets for our VM environments. Research users are allocated storage based on project needs and availability. All user data is backed up multiple times per day as snapshots on our EMC devices and maintained onsite for up to two years on tape.

Additional services provided include, but are not limited to, user web pages, on-demand virtual machines through our Cloud services, copy and print services, audio-visual broadcasting and recording, teleconferencing, and 24/7 end user helpdesk and support.

DWDM E-LITE Infrastructure. Old Dominion University manages the Eastern Lightwave Integrated Technology Enterprise (E-LITE) infrastructure, which provides 10Gbps connectivity to a number of regional institutions, including the College of William & Mary, Jefferson Lab, Old Dominion University, and the Virginia Modeling, Analysis, and Simulation Center (VMASC). The E-LITE infrastructure is designed as a physical ring around the Hampton Roads area, providing protected 10Gbps connectivity between the member sites and other national networks such as MARIA, the Energy Science Network and Internet2. The E-LITE network and its connectivity to MARIA are being redesigned to make the local DWDM ring 100Gbps-capable and to establish a 100Gbps connection to Internet2.

Old Dominion University recently completed a major upgrade of the core server distribution to integrate Nexus 7000 hardware. Nexus 7000 platforms are Cisco Systems’ next-generation switching platforms, designed for the data center to provide virtualized hardware, in-service upgrades, higher 10Gbps and 40Gbps port density, and higher performance and reliability. These platforms also provide the capability to integrate 100Gbps interfaces into the data center infrastructure as needed. The Cisco Nexus platforms in use include the 7000 and 5000 series, which provide a higher-bandwidth, reliable backbone infrastructure for critical services using technologies such as virtual port channels.

The data center UPS batteries for the HPC and network infrastructure consist of an uninterruptible power supply (UPS) system rated at 375 kW. This unit provides the considerable capacity needed when switching between commercial electrical power and the dedicated building power generator. The current UPS system uses high-performance insulated gate bipolar transistors to provide larger power capabilities, high-speed switching and lower control power consumption.

Campus Virtualized Network Infrastructure. The virtualized network infrastructure supports the unique requirements of University business operations, research, scholarly activities, and online course delivery. Course delivery technologies include video streaming and video conferencing. Campus Network Virtualization is an initiative implemented in the campus environment to enable our network infrastructure to provide the following features: (i) Communities of interest (virtual networks). This allows us to create network-based user communities that share the same functions and communication/application needs. This is accomplished using MPLS technology. (ii) High-performance and redundant security infrastructure. Security is an important part of any network infrastructure. We have to ensure that users can perform all their needed tasks on the network while having the best possible security protection in place. (iii) Flexibility to provision independent network infrastructures. This feature allows us to create smaller independent logical networks on the existing physical infrastructure. This is of great benefit in a research institution of ODU’s stature and allows us to work with researchers to provide the resources they need to succeed.