Difference between revisions of "PDR.PODM Distributed Memory"

Pthomadakis (talk | contribs) (→Delta 0.3780) |

Pthomadakis (talk | contribs) (→Latest Results) |


Latest revision as of 14:49, 19 May 2023


Issues

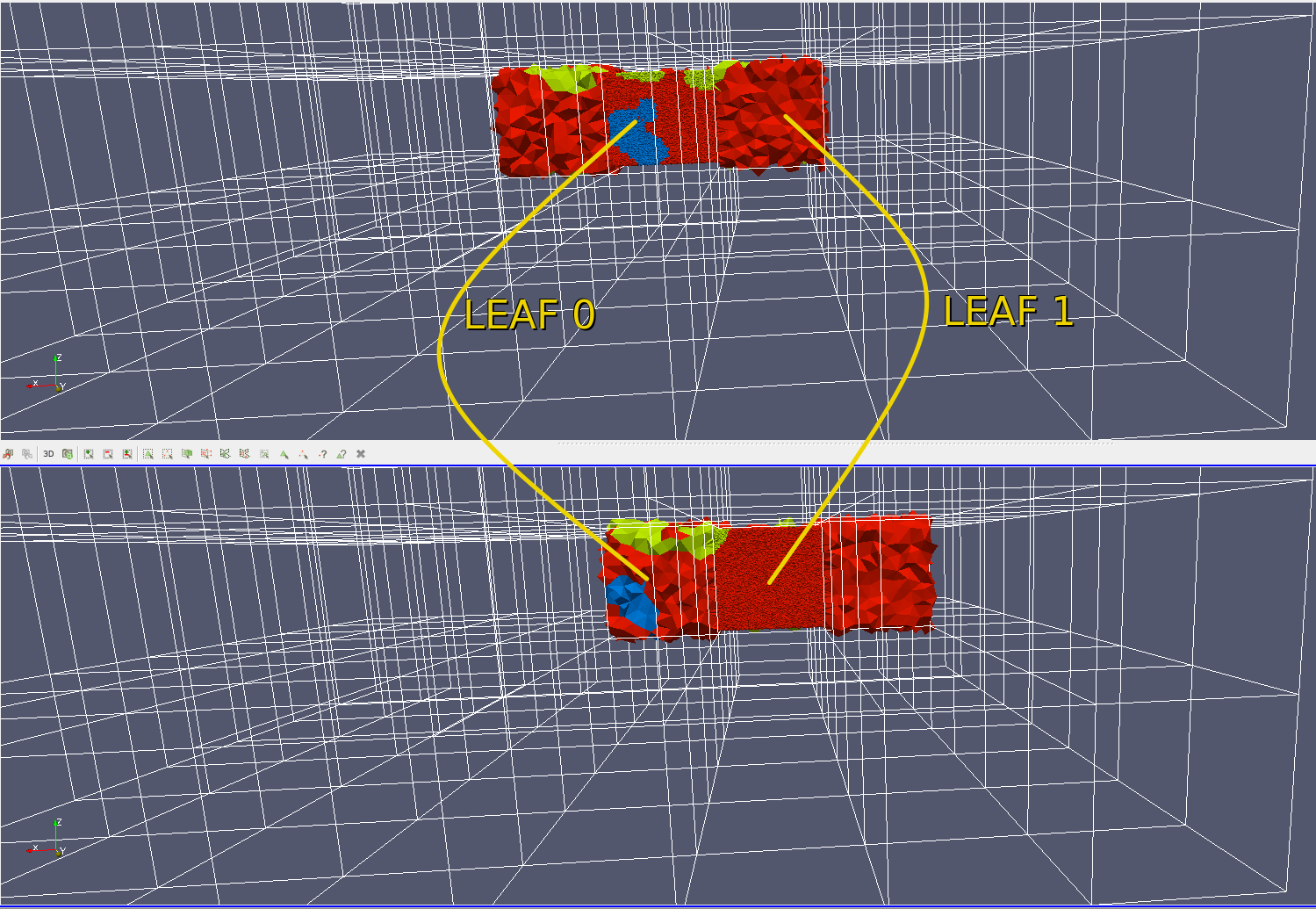

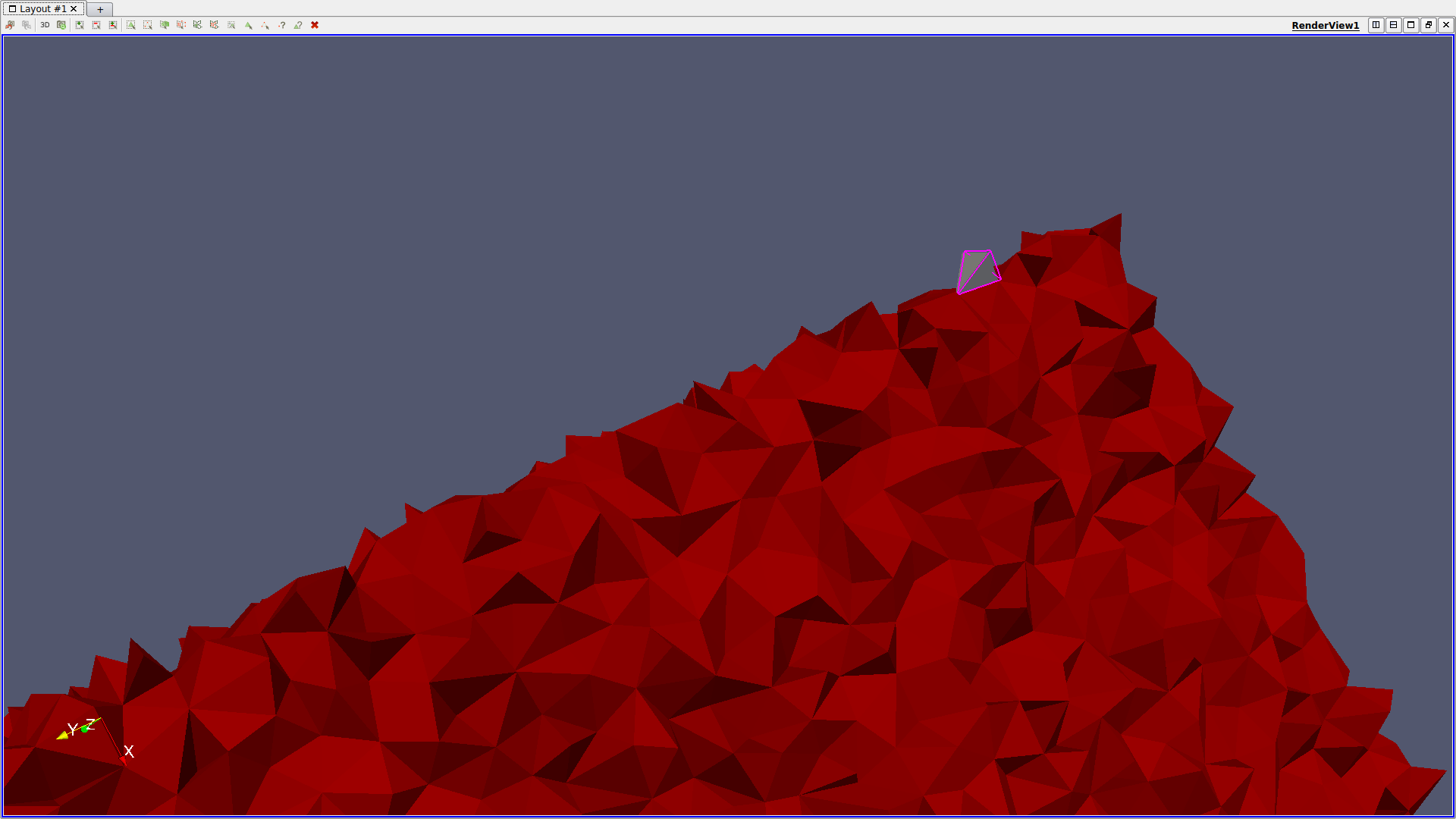

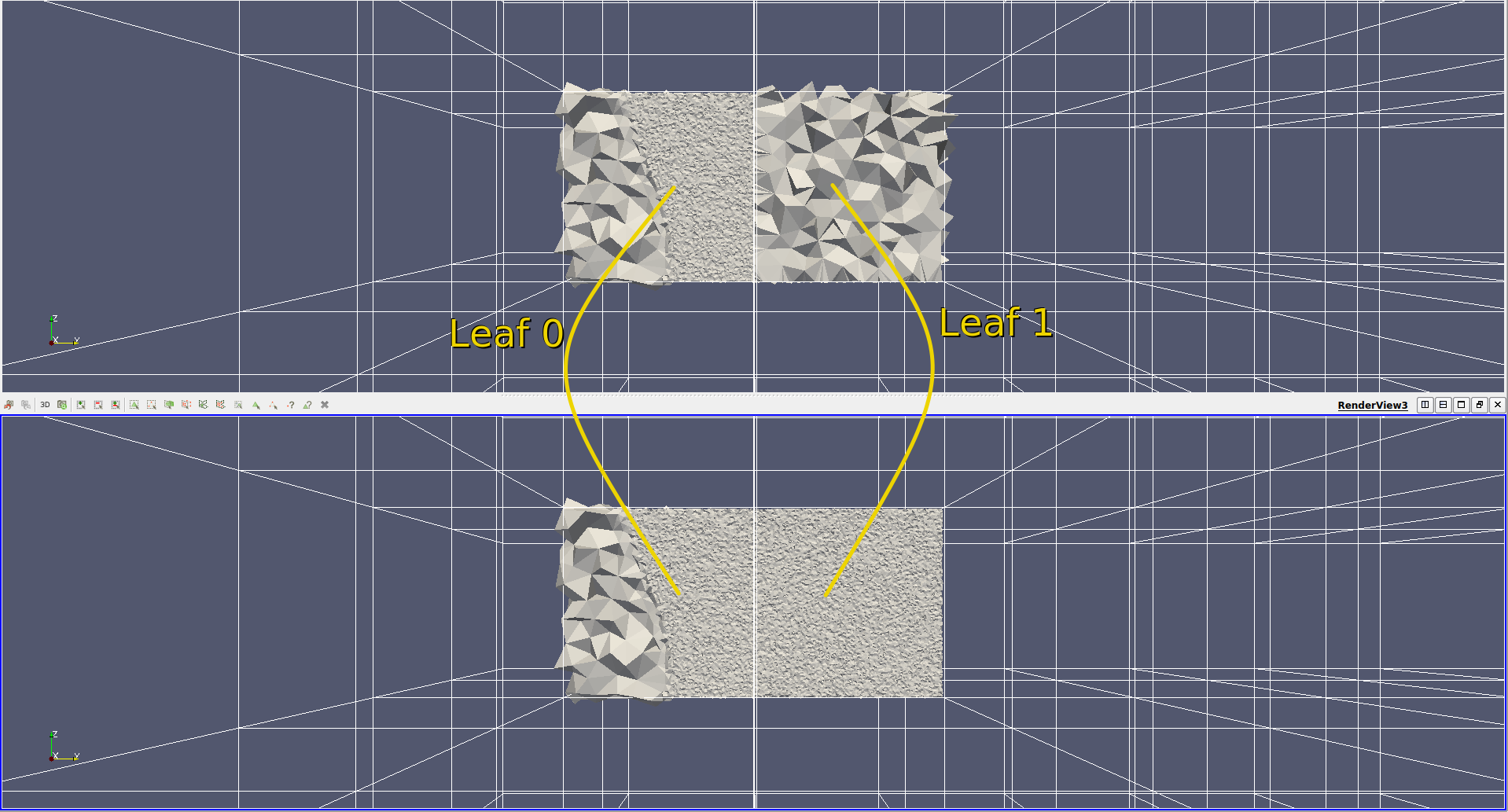

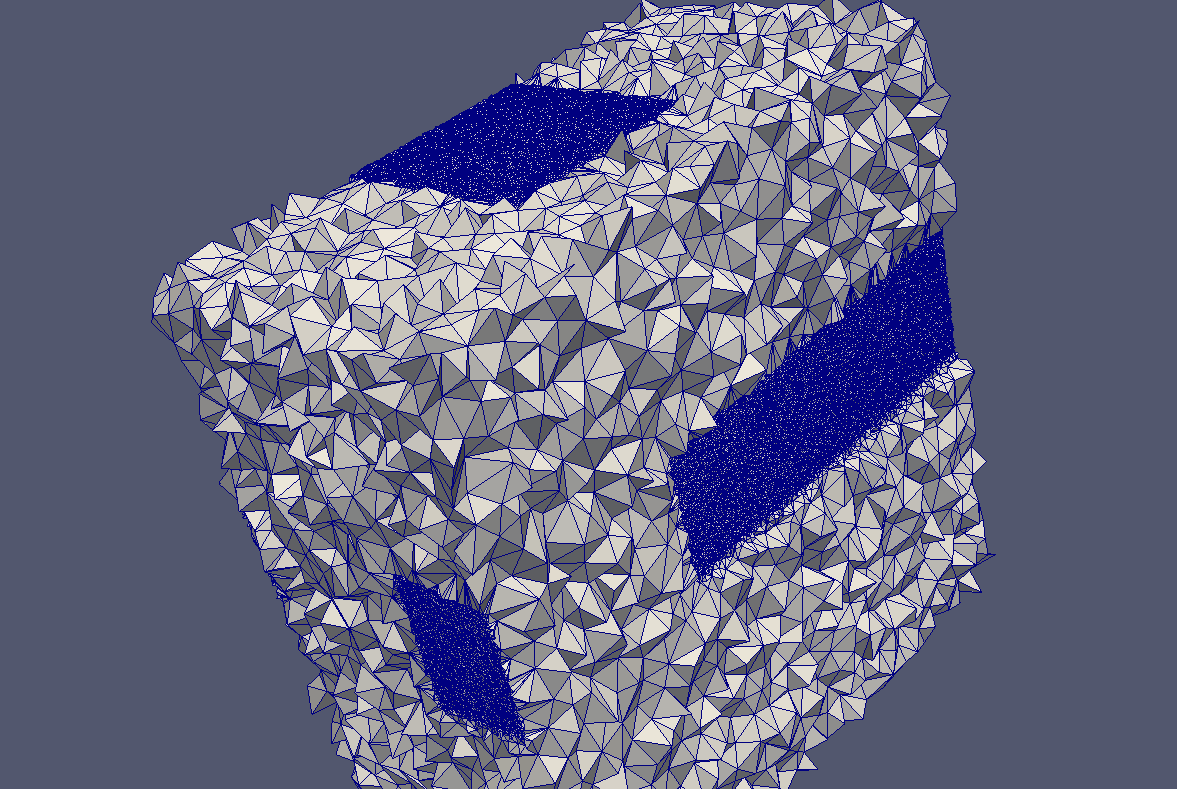

- Leaves refined by worker nodes are not reused. The picture below shows the issue: two neighbour leaves (0 and 1) are each refined as the main leaf (0 top, 1 bottom) but are not refined as a neighbour.

- The current algorithm uses neighbour traversal to distribute cells to the octree, leaving some cells out in some cases. This can happen when a cell belongs to an octree leaf based on its circumcenter but does not have any neighbour in the same leaf.

- During unpacking, the incident cell of each vertex is not set correctly. Specifically, when the initial incident cell is not part of the working unit (Leaf + LVL.1 Neighbours), and is therefore not local, it is set to the infinite cell. This causes PODM to crash randomly in some cases.

- Another issue comes from the way the neighbour IDs of each cell are updated with global IDs. The code that updates a cell's connectivity takes the neighbour's pointer, retrieves its global ID and updates the neighborID field. However, when the neighbour belongs to another work unit's leaf and is not local, this pointer is NULL. In this case the neighborID field is wrongly reset to the infinite cell ID, which permanently deletes the connectivity information.

- The function that unpacks the required leaves before refinement does not discard duplicate vertices. Duplicate vertices are always present: each leaf is packed and sent individually, so neighbouring leaves both include their shared vertices. Because duplicates are not handled, multiple vertex objects are created for what is geometrically the same point. Two cells that share a vertex can therefore hold pointers to two different vertex objects, and each cell sees a different state for the same vertex.
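The duplicate-vertex issue in the last item can be illustrated with a minimal Python sketch. It is not the PODM unpacking code: the `Vertex` and `Cell` classes, the `unpack_leaves` function and the packed-leaf layout (a list of cells, each a tuple of coordinate tuples) are hypothetical, and keying vertices by their exact coordinates is an assumption made for illustration (a global vertex ID would serve the same purpose). The idea is to look each incoming vertex up in a table shared across all unpacked leaves, so that cells from neighbouring leaves end up pointing to the same vertex object.

```python
from dataclasses import dataclass


@dataclass
class Vertex:
    coords: tuple          # (x, y, z), assumed bit-identical across leaves
    marked: bool = False   # stand-in for any per-vertex state


@dataclass
class Cell:
    vertices: list         # references to shared Vertex objects


def unpack_leaves(packed_leaves):
    """Unpack leaves that were packed individually, merging shared vertices.

    packed_leaves: hypothetical layout, a list of leaves; each leaf is a
    list of cells; each cell is a tuple of coordinate tuples.
    """
    vertex_by_coords = {}  # one table shared by ALL leaves being unpacked
    cells = []
    for leaf in packed_leaves:
        for cell_coords in leaf:
            cell_vertices = []
            for coords in cell_coords:
                vertex = vertex_by_coords.get(coords)
                if vertex is None:
                    # First time this point is seen: create the object.
                    vertex = Vertex(coords)
                    vertex_by_coords[coords] = vertex
                # Duplicates reuse the existing object instead of
                # creating a second one for the same point.
                cell_vertices.append(vertex)
            cells.append(Cell(cell_vertices))
    return cells, vertex_by_coords
```

With this arrangement, a state change made through one cell (for example setting `marked`) is visible from every other cell that shares the vertex, including cells that arrived in a different leaf.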

Fixes

Interesting Findings

Delta 0.880

15 MPI ranks depth: 3

15 MPI ranks depth: 4

40 MPI ranks / 40 cores depth: 4

160 MPI ranks / 10 cores depth: 4

After Parallel int to pointer

15 MPI ranks depth: 3

15 MPI ranks depth: 4

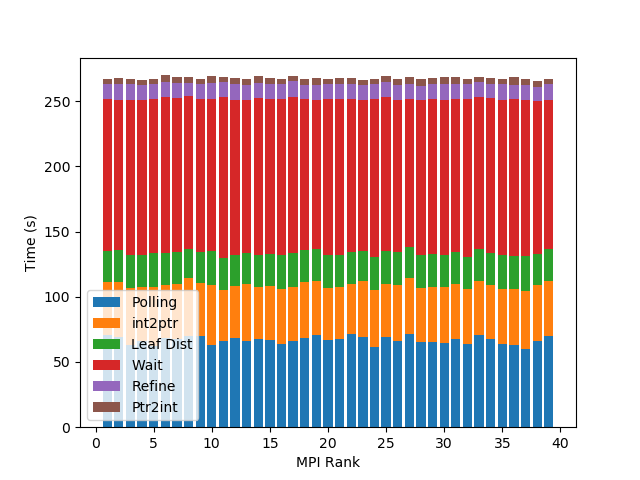

40 MPI ranks depth: 4

160 MPI ranks / 10 cores depth: 4

After Parallel int to pointer and leaf distribution

15 MPI ranks depth: 4

40 MPI ranks depth: 4

160 MPI ranks / 10 cores depth: 4

After Parallel int to pointer, leaf distribution and extra comm thread

15 MPI ranks depth: 3

15 MPI ranks depth: 4

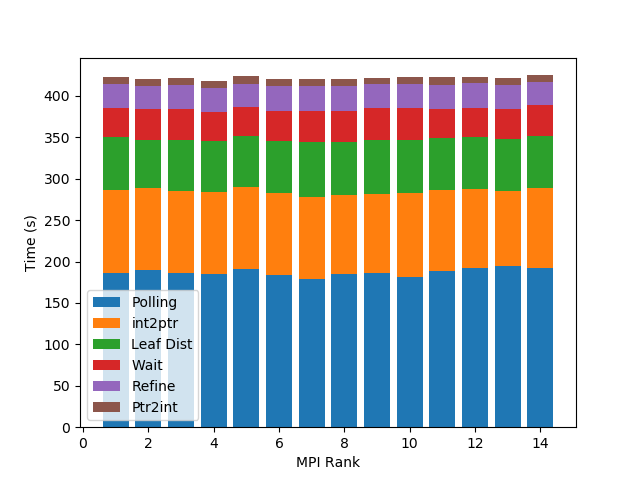

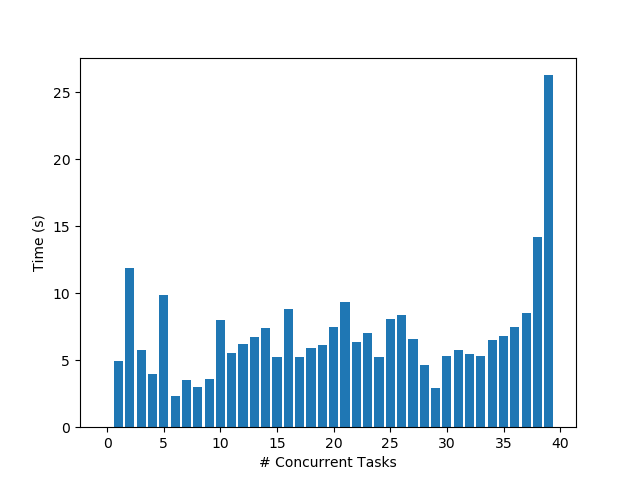

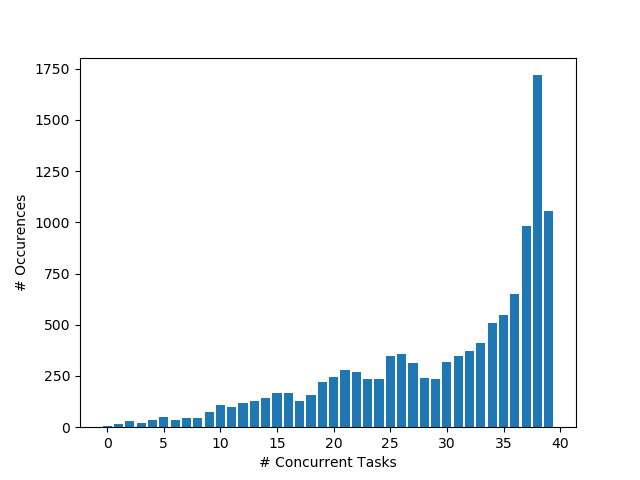

40 MPI ranks depth: 4

160 MPI ranks depth: 4

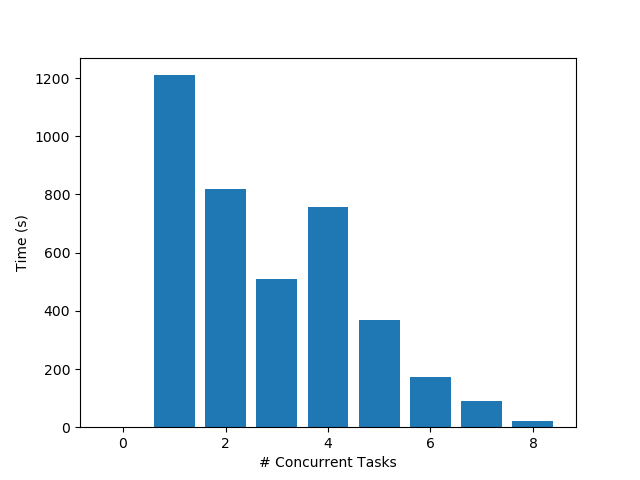

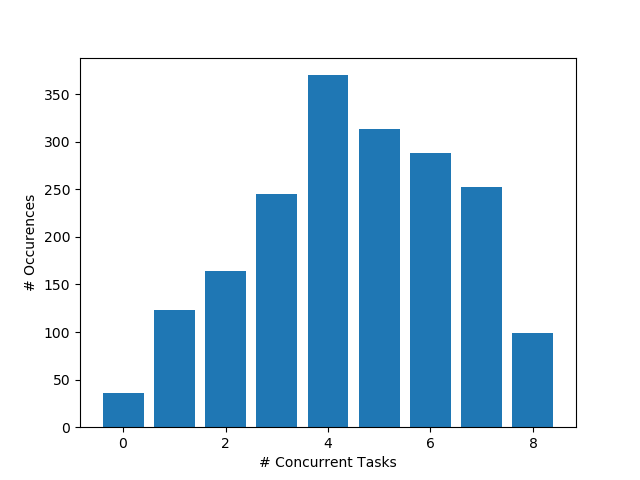

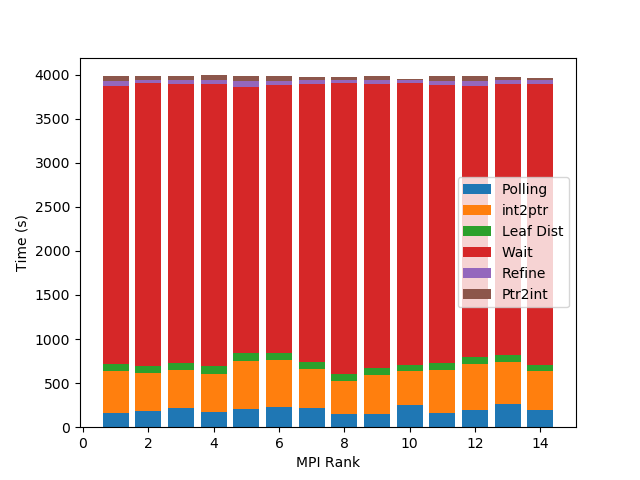

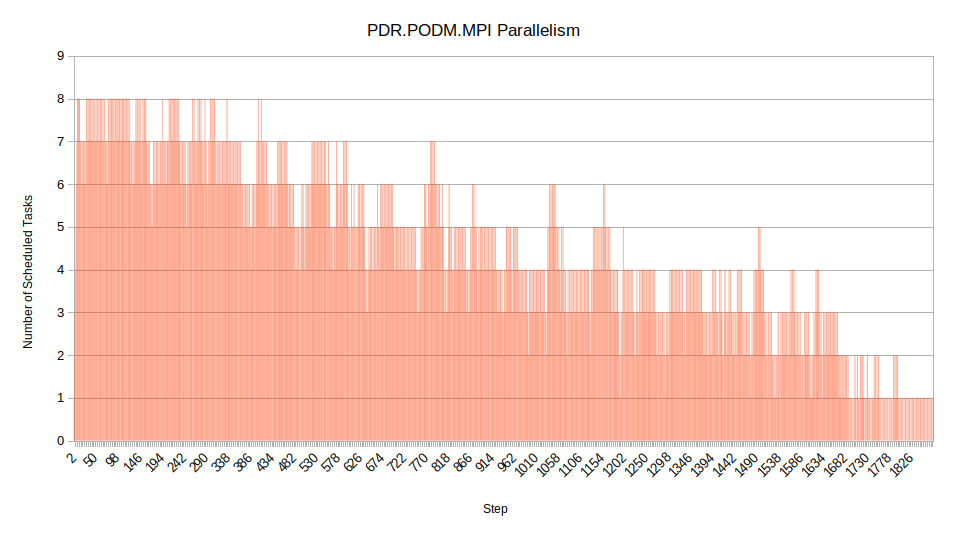

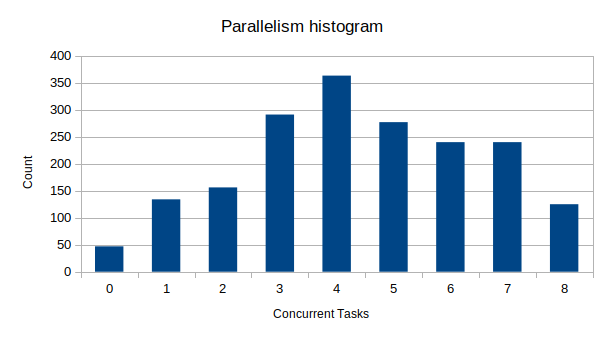

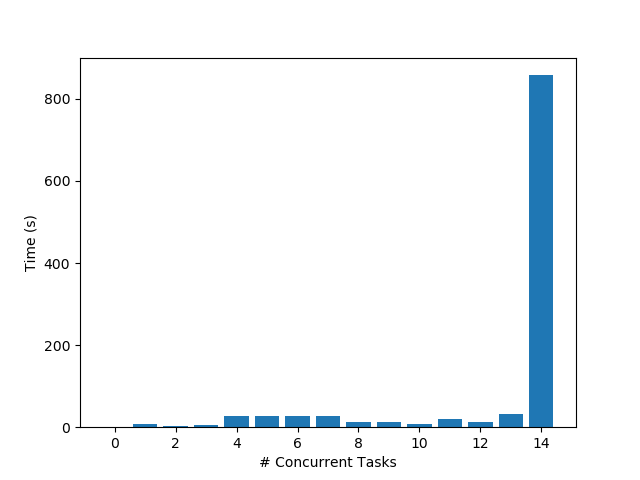

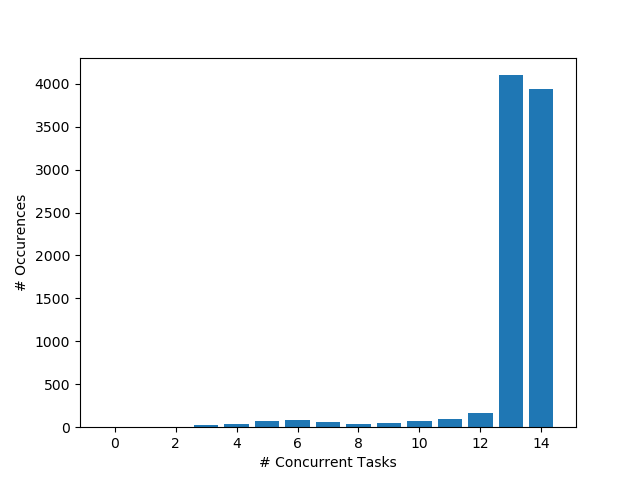

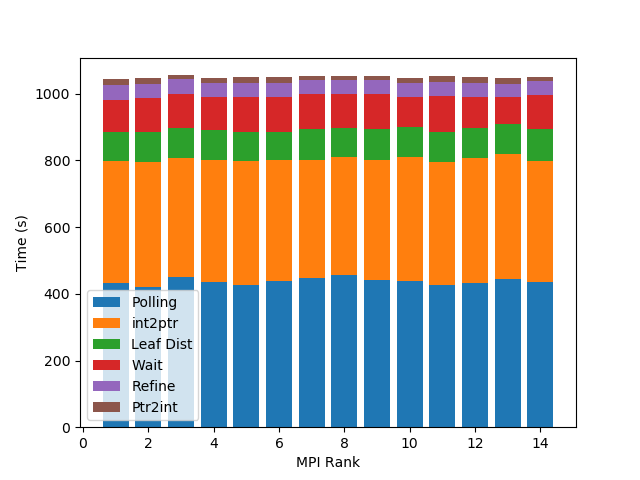

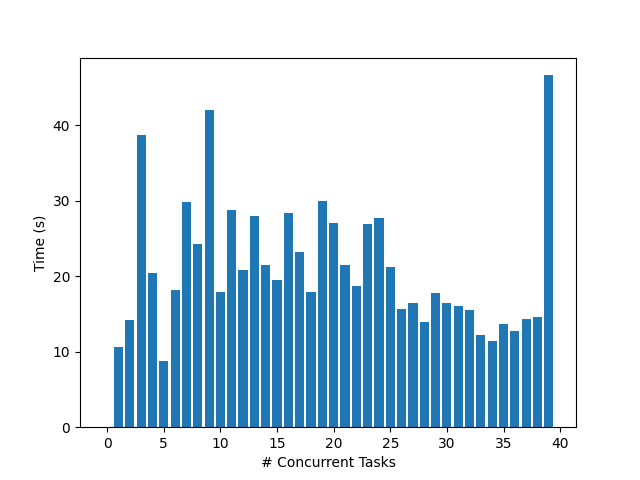

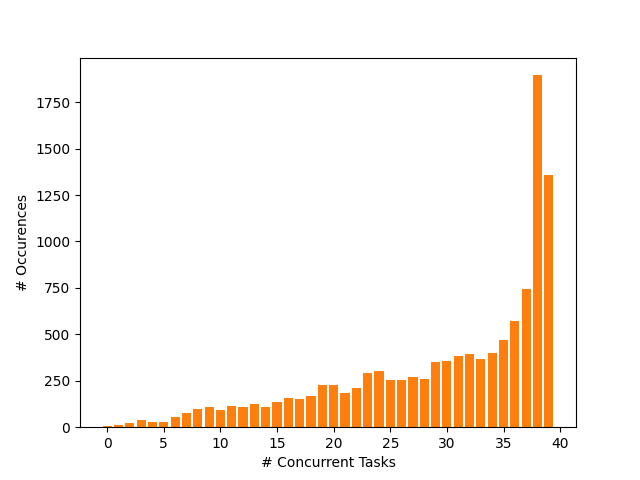

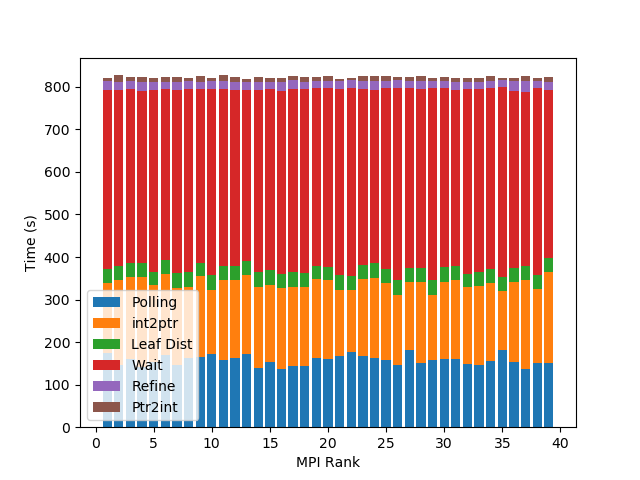

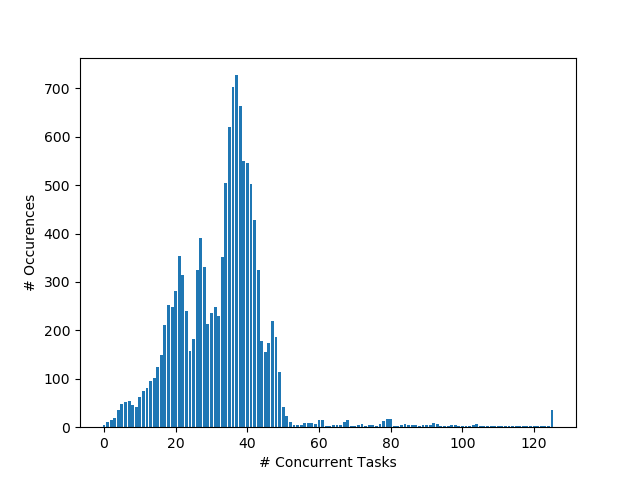

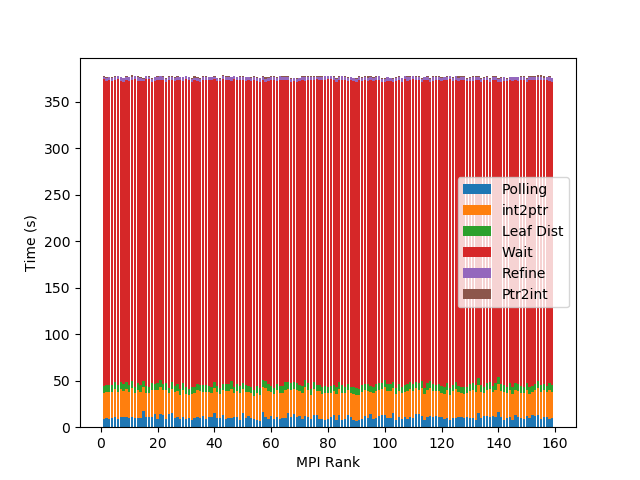

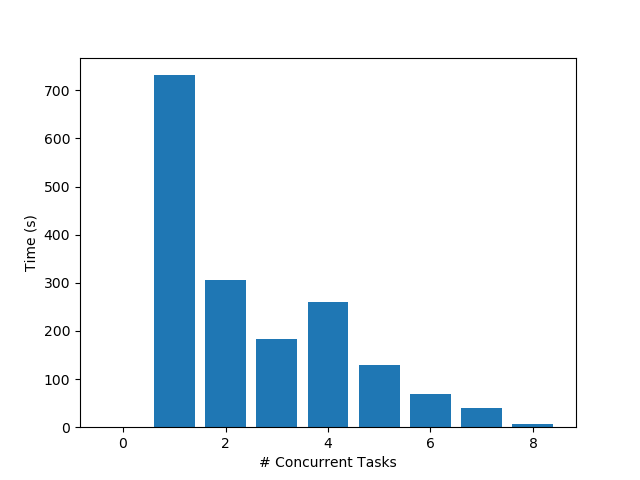

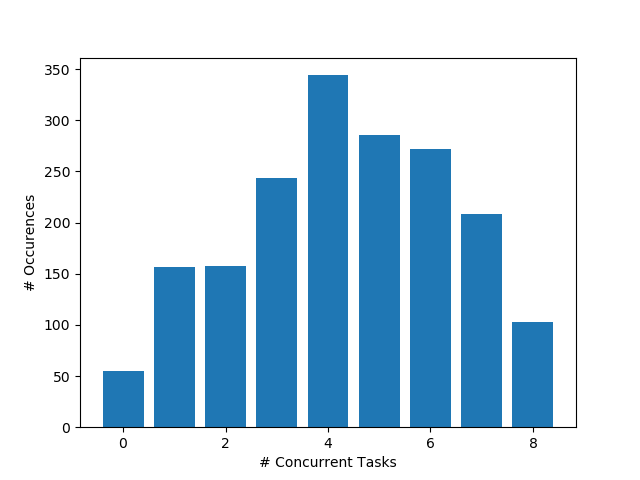

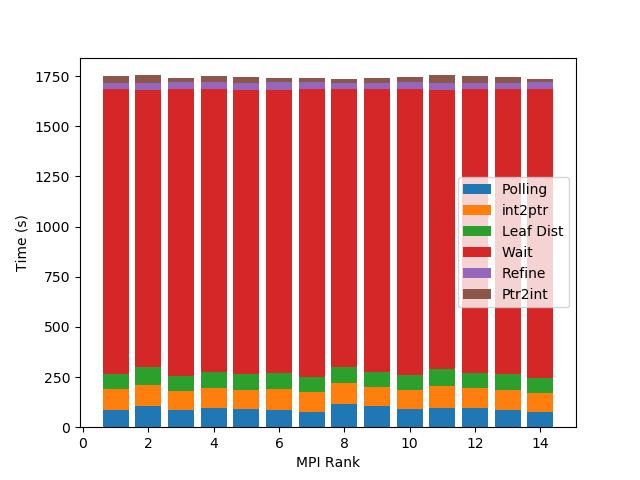

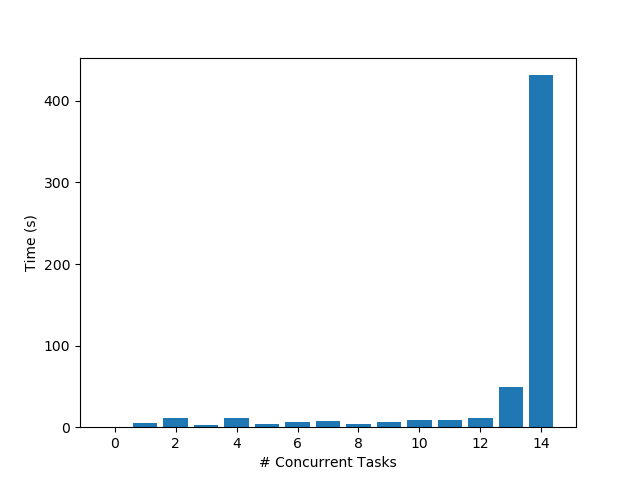

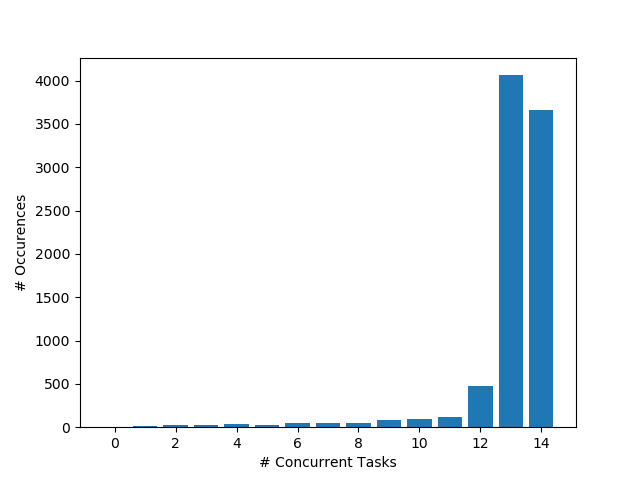

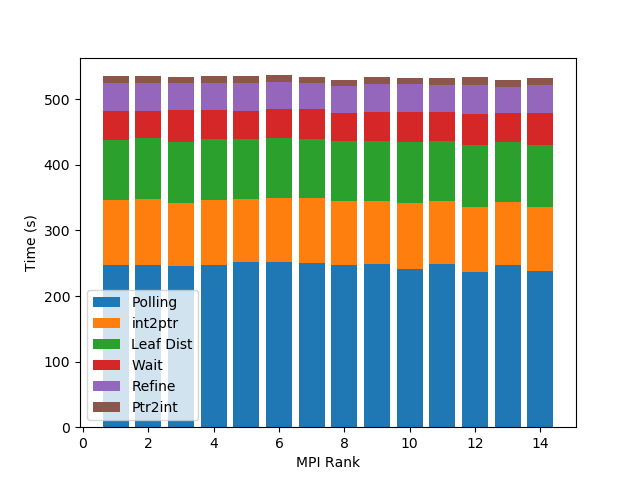

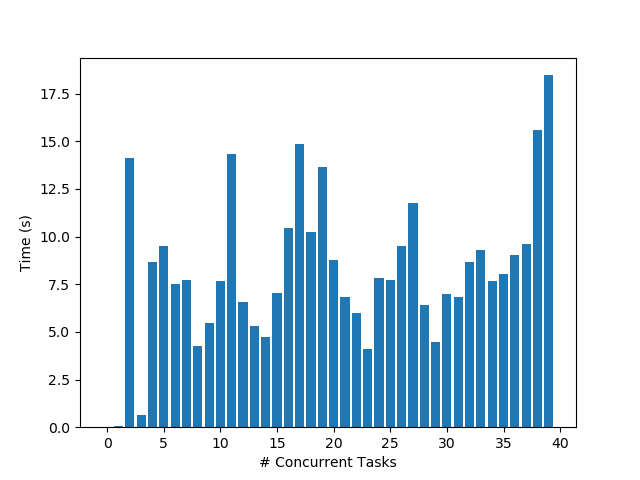

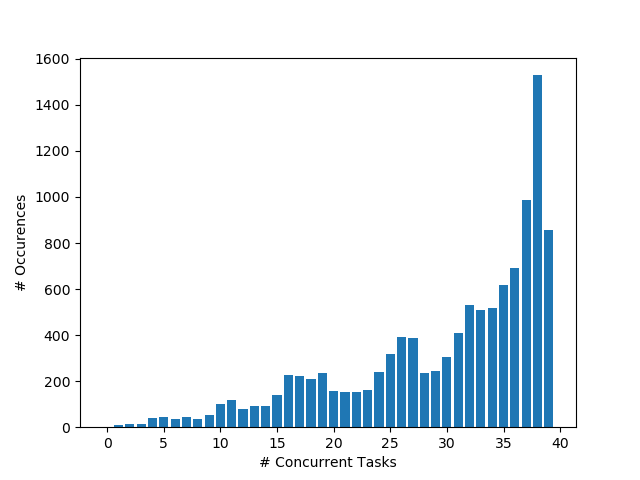

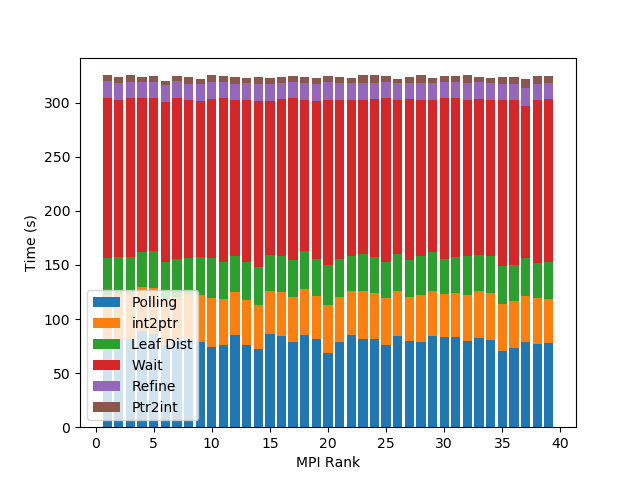

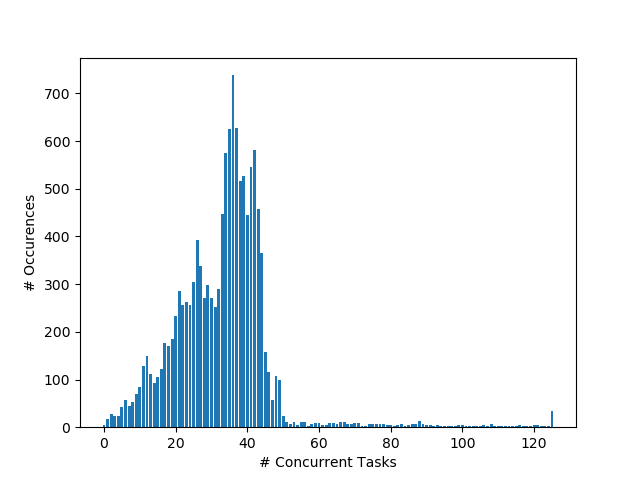

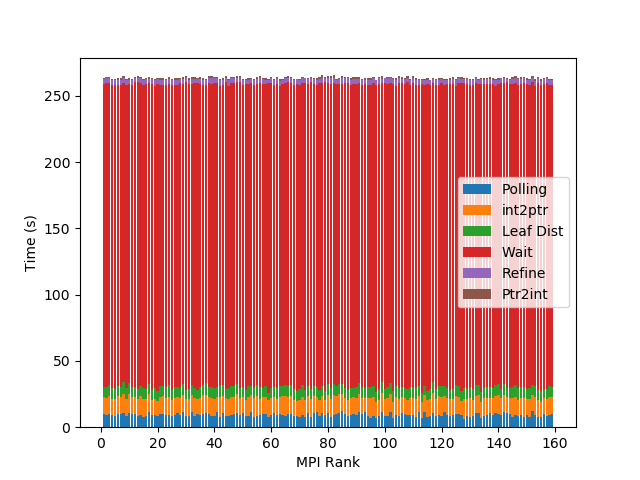

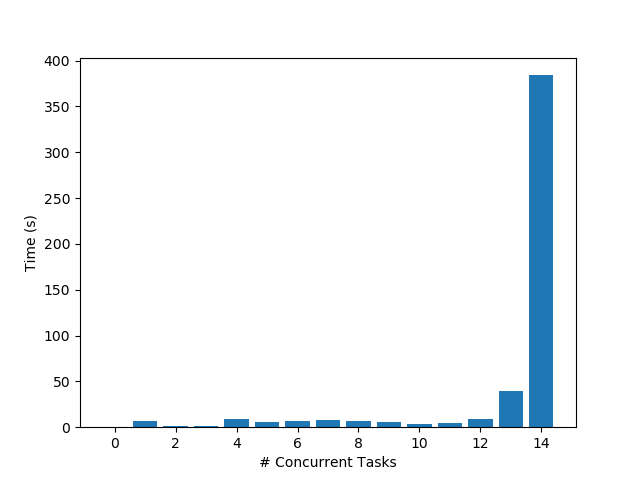

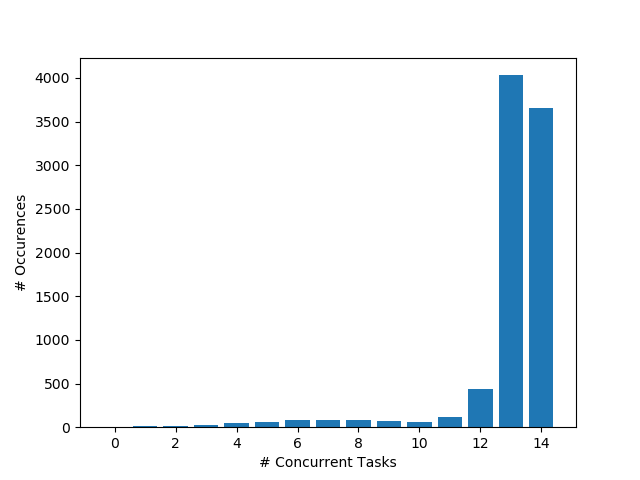

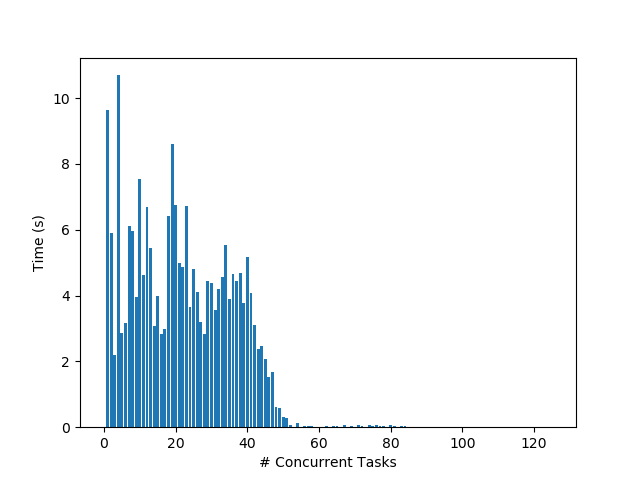

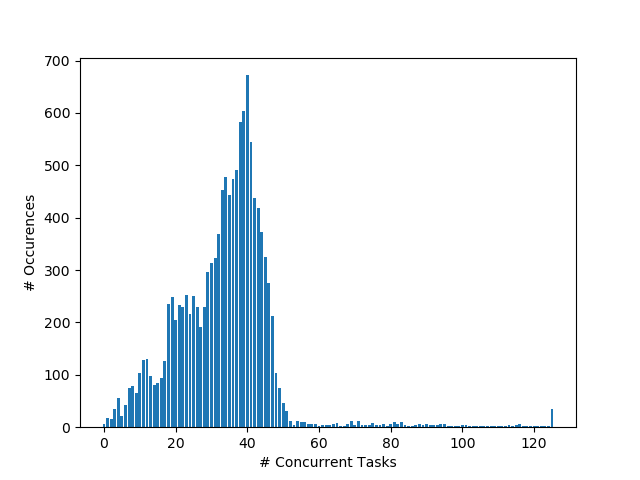

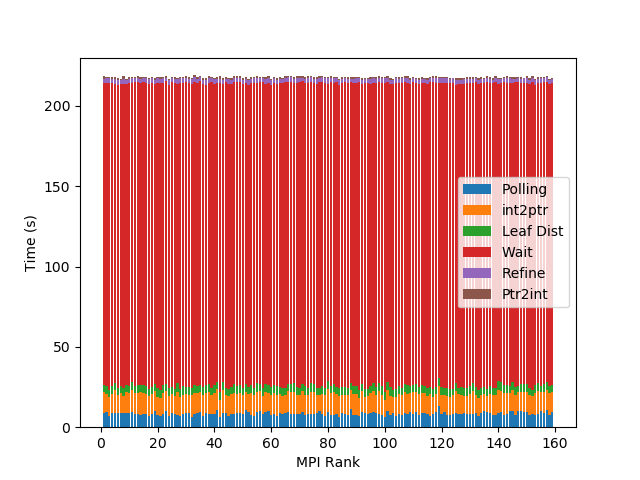

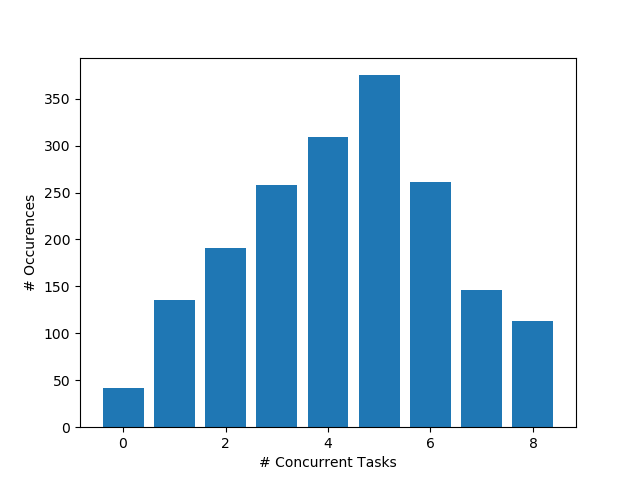

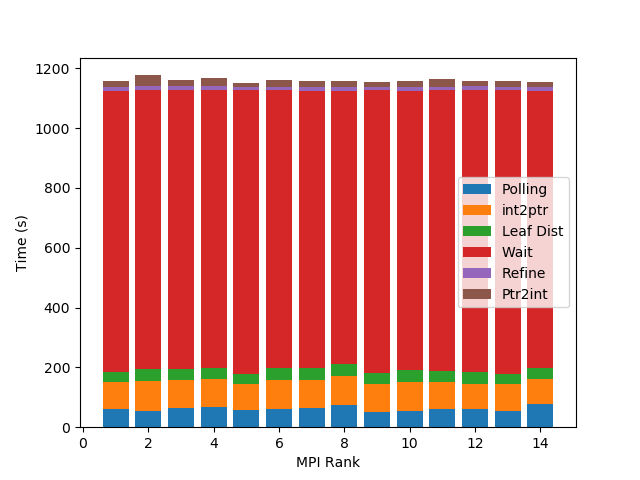

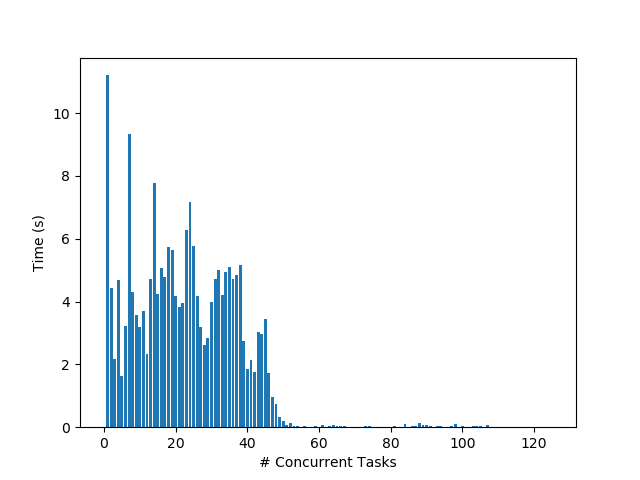

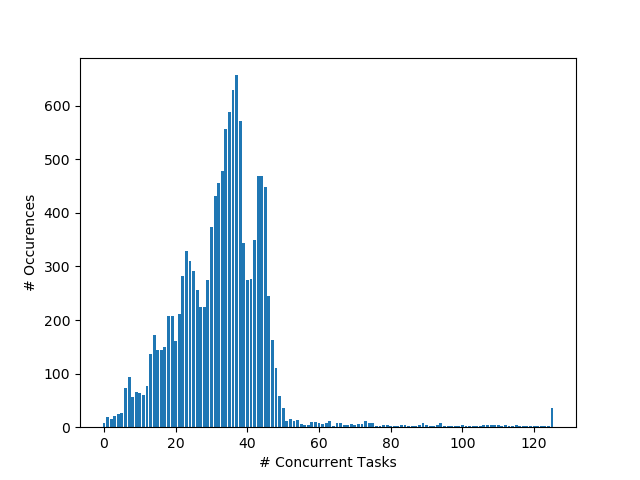

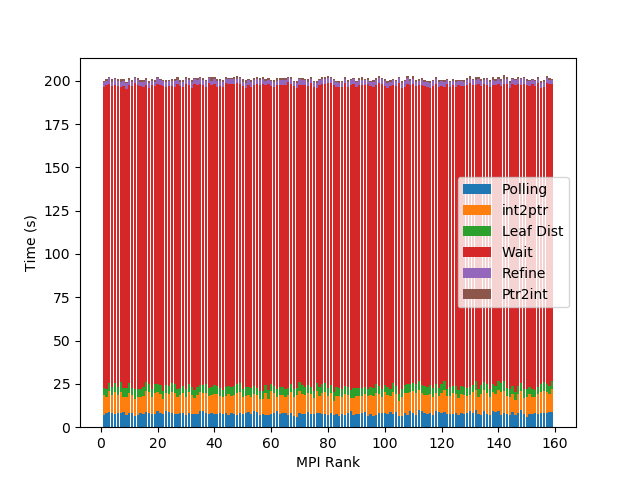

Delta 0.3780

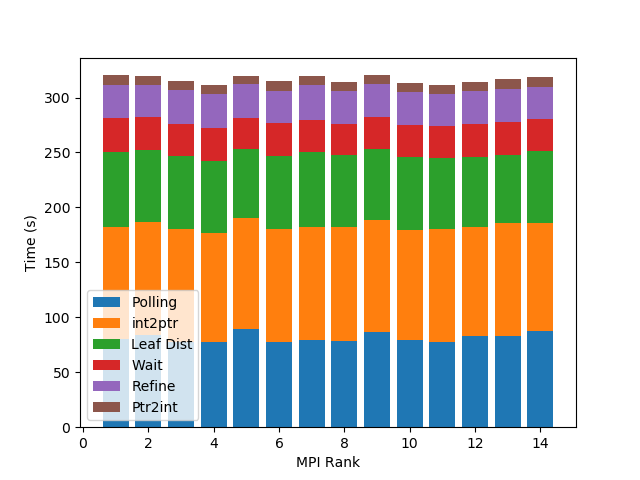

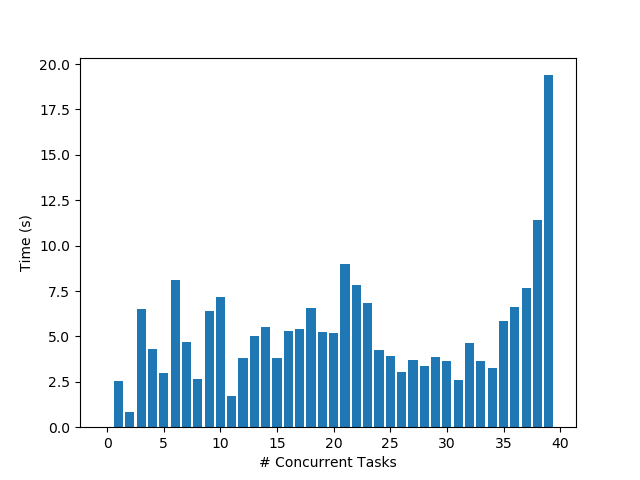

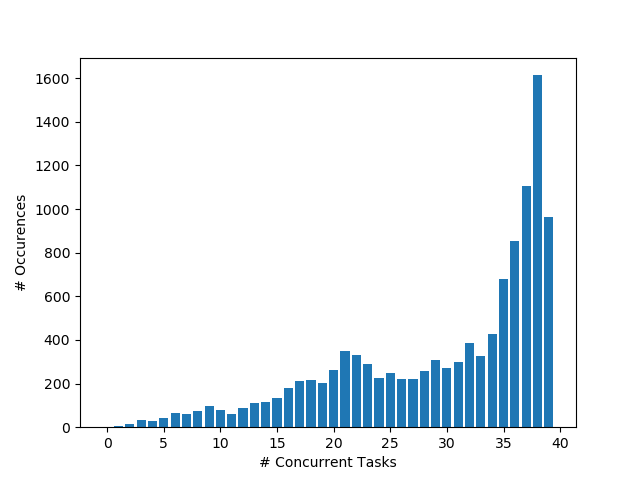

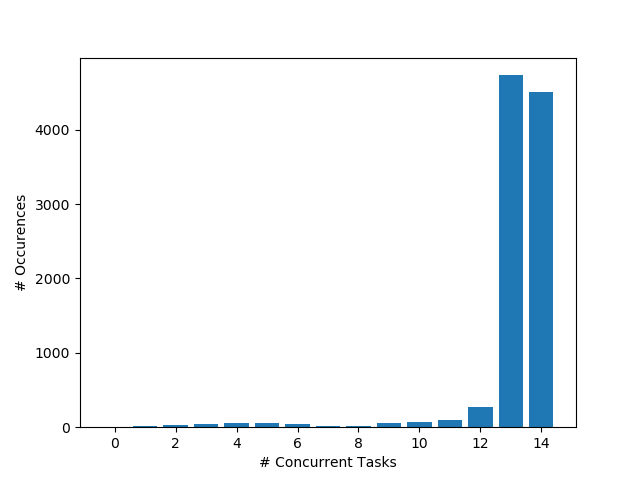

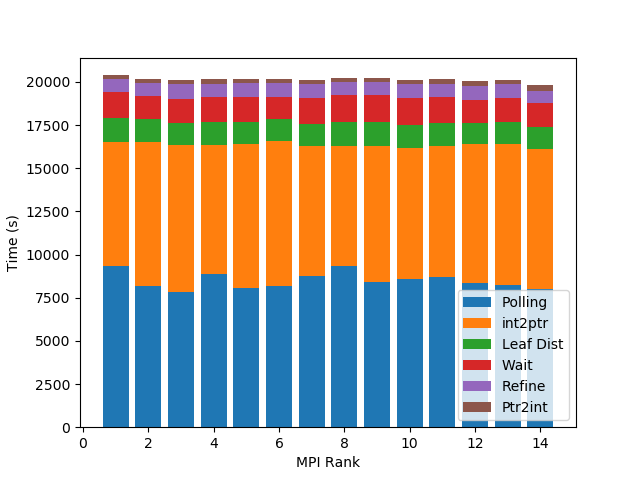

15 MPI ranks depth: 4

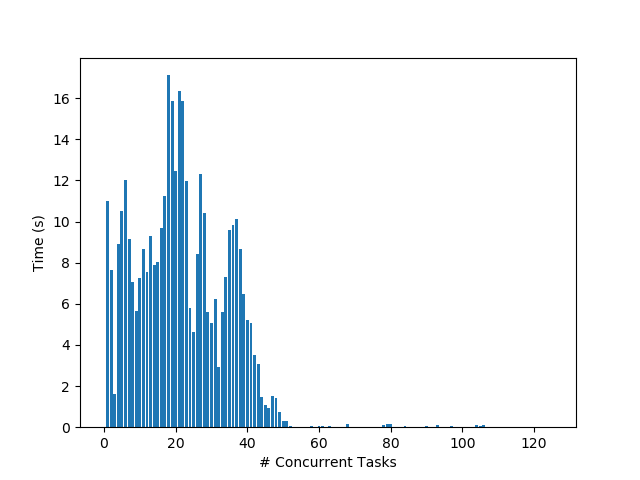

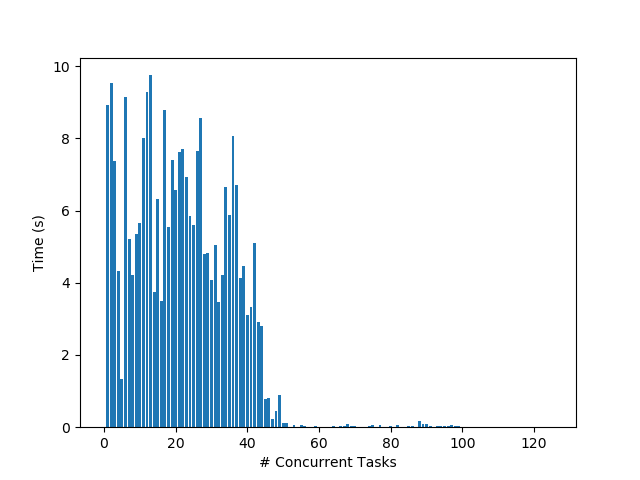

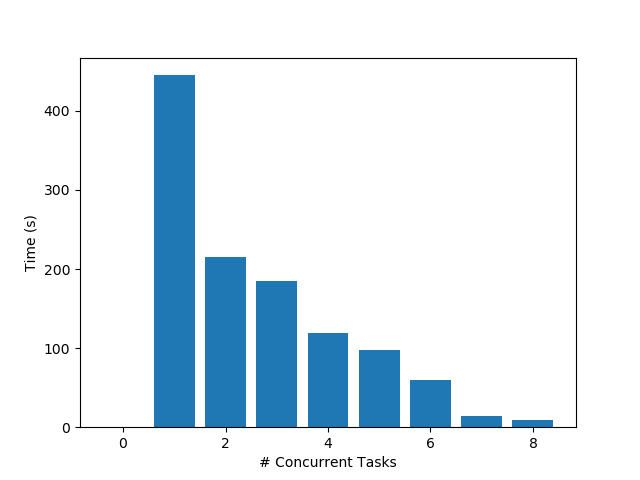

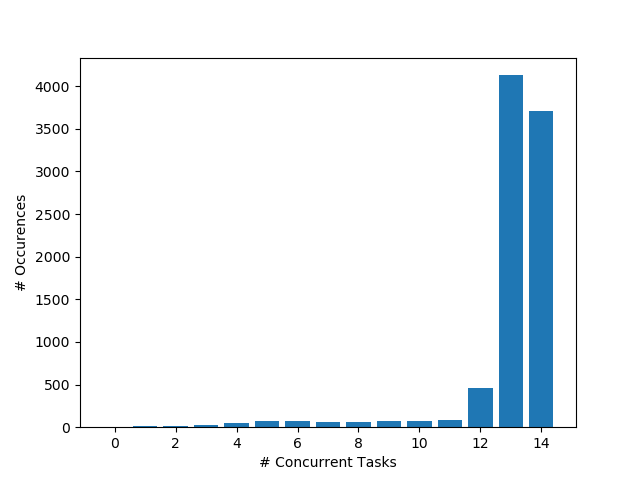

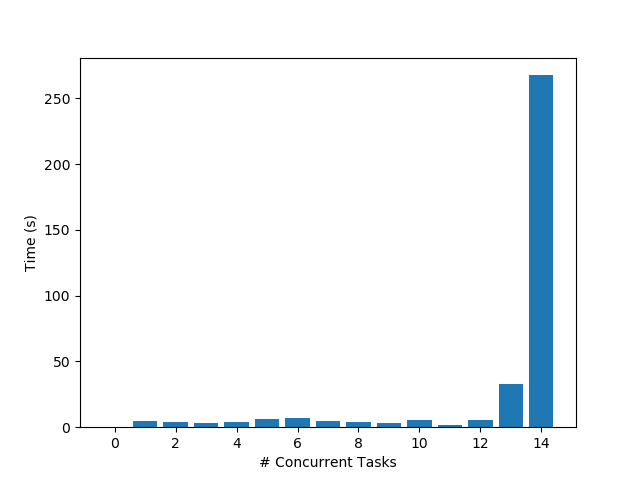

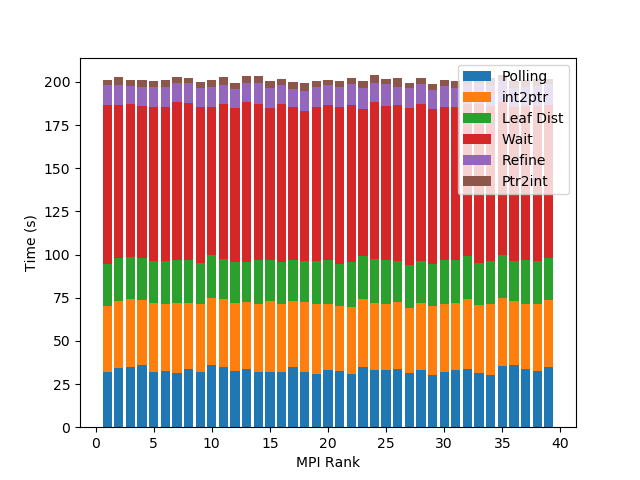

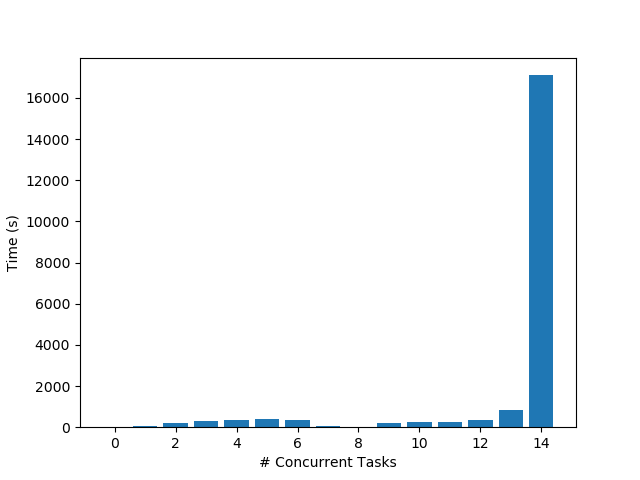

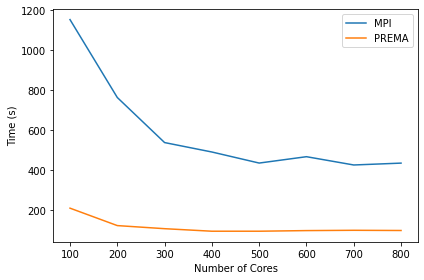

Latest Results

Shared memory times

| Cores | Total Time (s) | Number of Elements |
|---|---|---|
| 40 | 90.8 | 49453719 |

MPI times

| Cores | MPI Total Time (s) | MPI Number of Elements | PREMA Total Time (s) | PREMA Number of Elements |
|---|---|---|---|---|
| 100 | 1151.472406 | 49352359 | 208.746450 | 49347855 |
| 200 | 763.70671 | 49357898 | 121.326012 | 49353442 |
| 300 | 537.678638 | 49357092 | 105.812248 | 49351224 |
| 400 | 490.365970 | 49357881 | 93.101481 | 49351626 |
| 500 | 434.921334 | 49347357 | 93.119187 | 49351049 |
| 600 | 466.822803 | 49361647 | 96.243807 | 49345030 |
| 700 | 425.273205 | 49360615 | 97.721654 | 49346798 |
| 800 | 434.638603 | 49349629 | 96.723318 | 49341034 |
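
To make the MPI and PREMA timings easier to compare, the short Python sketch below derives two quantities from them: the ratio of MPI time to PREMA time at each core count, and the speedup of each version relative to its own 100-core run. The timing values are copied from the table; the names `TIMES`, `mpi_over_prema` and `speedup_vs_baseline`, and the choice of 100 cores as the baseline, are assumptions made for illustration and are not part of the original measurements.

```python
# Total times in seconds, copied from the MPI / PREMA results table.
# cores -> (MPI time, PREMA time)
TIMES = {
    100: (1151.472406, 208.746450),
    200: (763.70671, 121.326012),
    300: (537.678638, 105.812248),
    400: (490.365970, 93.101481),
    500: (434.921334, 93.119187),
    600: (466.822803, 96.243807),
    700: (425.273205, 97.721654),
    800: (434.638603, 96.723318),
}


def mpi_over_prema(times):
    """Ratio of MPI time to PREMA time at each core count."""
    return {cores: mpi / prema for cores, (mpi, prema) in times.items()}


def speedup_vs_baseline(times, column, baseline_cores=100):
    """Speedup of one version (column 0 = MPI, 1 = PREMA) relative to
    its own run at baseline_cores."""
    base = times[baseline_cores][column]
    return {cores: base / row[column] for cores, row in times.items()}


if __name__ == "__main__":
    ratios = mpi_over_prema(TIMES)
    mpi_speedup = speedup_vs_baseline(TIMES, column=0)
    prema_speedup = speedup_vs_baseline(TIMES, column=1)
    for cores in sorted(TIMES):
        print(f"{cores:4d} cores: MPI/PREMA = {ratios[cores]:.2f}, "
              f"MPI speedup = {mpi_speedup[cores]:.2f}, "
              f"PREMA speedup = {prema_speedup[cores]:.2f}")
```

In these measurements the PREMA version is more than four times faster than the MPI version at every core count, and its total time stops improving after 400 cores.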